Part 2: Data-Level Interventions (Pre-Processing)

Context

Data-level interventions tackle bias at its source—the data itself—preventing unfairness before it becomes encoded in models.

This Part equips you with techniques to transform biased datasets into fairer representations. You'll learn to reshape distributions, reweight samples, and regenerate data points rather than accepting biased inputs as immutable facts. Too often, data scientists treat datasets as fixed constraints rather than malleable materials.

Reweighting methods adjust sample influence based on protected attributes. For instance, a loan approval algorithm might give greater importance to historically underrepresented applicants during training, counteracting representation imbalances that would otherwise skew predictions.

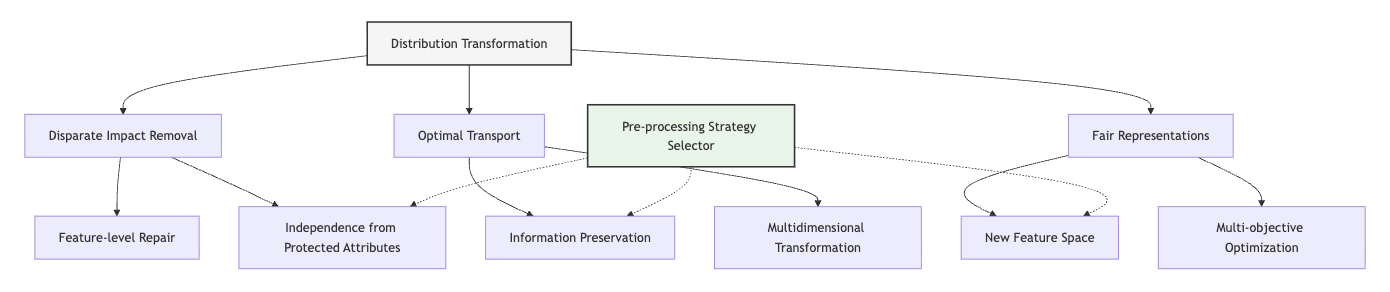

Transformation approaches modify features to break problematic correlations while preserving predictive power. This matters because variables like zip code often serve as proxies for race in US housing contexts, perpetuating redlining effects when left untreated. Transformations can preserve a feature's predictive utility while removing its correlation with protected attributes.

These interventions integrate throughout your ML workflow—from exploratory analysis that reveals representation gaps to validation practices that verify fairness improvements. Techniques range from simple instance weighting to complex generative methods that synthesize balanced datasets.

The Pre-Processing Fairness Toolkit you'll develop in Unit 5 represents the second component of the Fairness Intervention Playbook. This tool will help you select and configure appropriate data transformations for specific bias patterns, ensuring that your models train on fair representations from the start.

Learning Objectives

By the end of this Part, you will be able to:

- Analyze data representation disparities across protected groups. You will detect and quantify imbalances, missing values, and quality differences, enabling targeted interventions that address specific fairness gaps rather than applying generic fixes.

- Implement reweighting techniques that adjust sample importance. You will apply methods from simple frequency-based weights to sophisticated distribution matching, transforming unbalanced datasets into effectively balanced representations without discarding valuable samples.

- Design feature transformations that mitigate proxy discrimination. You will create methods that reduce problematic correlations between features and protected attributes, preserving legitimate predictive signal while removing discriminatory pathways.

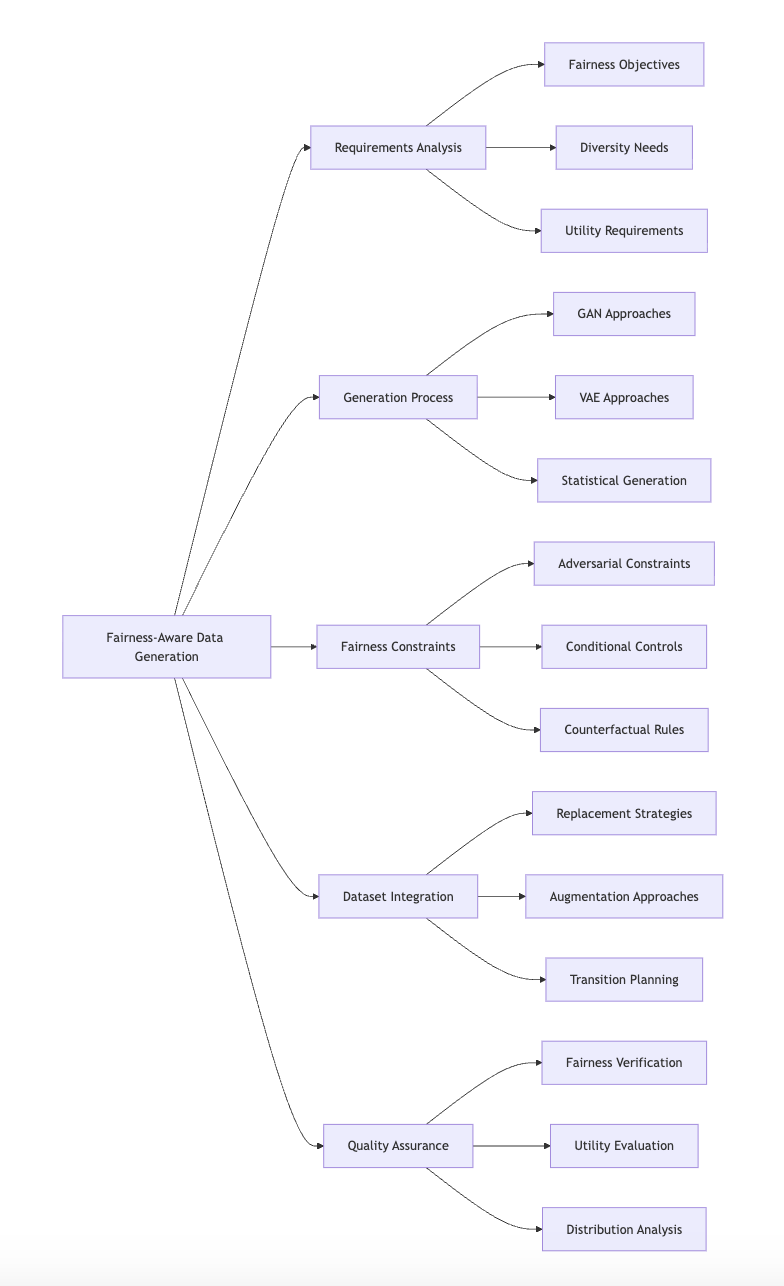

- Develop fairness-aware data augmentation strategies. You will generate synthetic samples for underrepresented groups, moving from limited or imbalanced data to robust representations that support fair model training.

- Evaluate data-level interventions for fairness impact and information preservation. You will create metrics and validation approaches that balance fairness improvements against predictive power, enabling informed decisions about intervention trade-offs in specific contexts.

Units

Unit 1

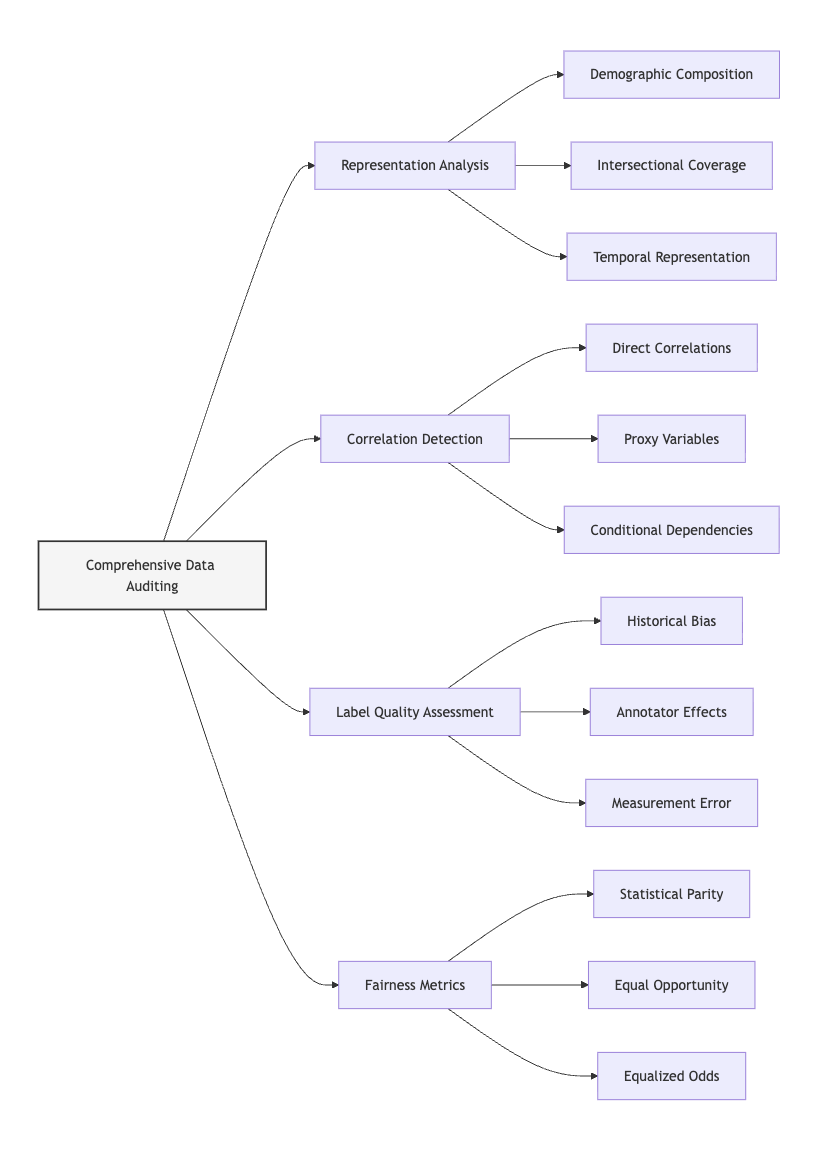

Unit 1: Comprehensive Data Auditing

1. Conceptual Foundation and Relevance

Guiding Questions

- Question 1: How do we systematically uncover and quantify bias patterns in training data before they propagate to machine learning models?

- Question 2: What analytical frameworks enable us to distinguish between different types of data biases, and how can these insights guide appropriate pre-processing interventions?

Conceptual Context

Before applying any fairness intervention, you must first establish a clear understanding of how bias manifests in your training data. Comprehensive data auditing provides the diagnostic foundation for all subsequent fairness work—without it, interventions may target symptoms rather than causes or, worse, introduce new forms of unfairness through misguided corrections.

Data auditing for fairness goes beyond conventional data quality analysis to systematically examine representation disparities, problematic correlations, label quality issues, and other bias patterns that can lead to discriminatory model behavior. As Gebru et al. (2021) note, "Understanding dataset composition is fundamental to understanding how a machine learning model will perform in deployment, particularly when there are fairness concerns" (Gebru et al., 2021).

This Unit builds upon the causal understanding from Part 1, translating causal insights into specific data auditing approaches that identify bias mechanisms. It establishes the analytical foundation for the pre-processing techniques you'll explore in Units 2-4 by determining which types of interventions are most appropriate for specific bias patterns. The comprehensive auditing methodologies you learn here will directly inform the Pre-processing Strategy Selector you'll develop in Unit 5, providing the diagnostic inputs that guide intervention selection.

2. Key Concepts

Multidimensional Representation Analysis

Representation analysis examines whether different demographic groups are adequately and accurately represented in training data. This concept is fundamental to AI fairness because models trained on data with demographic imbalances often perform worse for underrepresented groups, perpetuating and sometimes amplifying existing disparities.

This concept connects to other fairness concepts by establishing the baseline demographic composition that informs subsequent analysis. It intersects with sampling bias detection by quantifying representation gaps, and with label quality assessment by examining whether representation varies across outcome categories.

Conventional data analysis often examines univariate distributions of protected attributes, but multidimensional representation analysis goes further to examine intersectional representation—how well the data represents individuals across combinations of protected attributes. Buolamwini and Gebru's (2018) Gender Shades research demonstrated the critical importance of this approach, revealing that facial recognition datasets had severe representation gaps at the intersection of gender and skin tone that weren't apparent when examining either attribute in isolation.

For example, in a hiring algorithm training dataset, you might find adequate overall representation of women (48%) and adequate representation of people of color (30%), but severe underrepresentation of women of color (only 9% instead of the expected 14.4% if distributions were independent). This intersectional gap creates particular vulnerability to discrimination for this demographic group.

Comprehensive representation analysis includes:

- Comparison of dataset demographics to reference populations (e.g., census data or application-specific benchmarks)

- Identification of representation gaps across protected attributes and their intersections

- Analysis of representation across outcome categories (e.g., positive vs. negative labels)

- Temporal analysis to detect shifts in representation over time

For the Pre-processing Strategy Selector you'll develop in Unit 5, multidimensional representation analysis will serve as a key diagnostic input, helping determine whether reweighting, resampling, or generative approaches are most appropriate for addressing representation disparities.

Correlation and Association Pattern Detection

Beyond representation, data auditing must systematically examine how protected attributes correlate with other features and outcomes. This concept is crucial for AI fairness because these correlations create pathways for both direct and proxy discrimination, even when protected attributes are explicitly excluded from models.

This correlation analysis builds on representation assessment by examining relationships rather than just frequencies. It interacts with fairness interventions by identifying which specific correlations should be targeted for modification through transformation techniques.

Correlation patterns between protected attributes and other features can reveal proxy discrimination pathways. For instance, Angwin et al. (2016) found that in criminal risk assessment data, variables like "prior police contacts" correlated strongly with race due to historical patterns of over-policing in certain neighborhoods, creating an indirect path for racial discrimination.

When auditing data for fairness, you need to examine:

- Direct correlations between protected attributes and outcomes or labels

- Correlations between protected attributes and ostensibly neutral features

- Higher-order associations (e.g., interactions, conditional dependencies)

- Correlation stability across different data subsets and time periods

Modern data auditing goes beyond simple correlation coefficients to employ techniques like mutual information analysis, permutation-based feature importance, and sensitivity analysis to identify subtle association patterns that may lead to discrimination.

For the Pre-processing Strategy Selector, these correlation analyses will help determine when distribution transformation techniques are necessary to address proxy discrimination rather than just adjusting representation through reweighting or resampling.

Label Quality and Annotation Bias Assessment

Training labels themselves can embed historical discrimination or reflect annotator biases, making label quality assessment a critical component of comprehensive data auditing. This concept is essential for AI fairness because biased labels will lead to discriminatory models regardless of how balanced the underlying feature representation may be.

This concept connects to representation analysis by examining not just who is represented but how they are labeled. It intersects with correlation analysis by focusing specifically on the relationship between protected attributes and outcomes, which is often the most direct manifestation of discrimination.

Jacobs and Wallach (2021) demonstrate how measurement errors in labels can have disparate impacts across demographic groups. Their research shows that when annotations are created through subjective human judgments, systematic biases can enter the data through unconscious annotator preferences or stereotypes. For example, studies have found that human annotators consistently rate identical resumes as less qualified when associated with stereotypically female or non-white names (Bertrand & Mullainathan, 2004).

Comprehensive label quality assessment involves:

- Analysis of potential historical biases in outcome definitions

- Examination of inter-annotator agreement across demographic categories

- Validation of labels against external benchmarks when possible

- Counterfactual analysis that tests whether labels would differ if protected attributes were changed

For the Pre-processing Strategy Selector, label quality assessment will inform whether interventions should focus primarily on features, labels, or both, and may suggest specific approaches like relabeling or label smoothing to address annotation biases.

Fairness Metric Baseline Calculation

Establishing baseline fairness metrics on the raw training data provides essential context for subsequent interventions. This concept is fundamental to AI fairness because it quantifies the initial fairness gaps that interventions must address and establishes the benchmarks against which intervention effectiveness will be measured.

This concept builds on all previous analysis components by translating descriptive findings into specific fairness metrics aligned with normative fairness definitions. It connects directly to intervention selection by establishing which fairness criteria are most violated in the raw data.

Different fairness definitions lead to different metrics (Barocas et al., 2019). Statistical parity examines whether outcomes are independent of protected attributes; equal opportunity focuses on true positive rates across groups; equalized odds considers both true positive and false positive rates. By calculating these metrics on raw training data, you establish a comprehensive fairness baseline that guides intervention priorities.

For a loan approval dataset, baseline calculations might reveal a 12 percentage point disparity in approval rates between demographic groups (violating demographic parity), but similar true positive rates across groups (satisfying equal opportunity). This pattern suggests that interventions should prioritize addressing representation disparities rather than classification errors if demographic parity is the chosen fairness definition.

Comprehensive baseline calculation includes:

- Computing multiple fairness metrics aligned with different fairness definitions

- Calculating confidence intervals to assess statistical significance of disparities

- Examining metrics across demographic intersections

- Identifying which fairness violations are most severe and would most benefit from intervention

For the Pre-processing Strategy Selector, these baseline metrics will serve as quantitative criteria for prioritizing which bias patterns to address first and will establish the evaluation framework for assessing intervention effectiveness.

Domain Modeling Perspective

From a domain modeling perspective, comprehensive data auditing maps to specific components of ML systems:

- Data Collection Documentation: How was data gathered, and what sampling approaches might have introduced biases?

- Feature Distribution Analysis: How do feature distributions vary across protected groups, and which features correlate with protected attributes?

- Label Quality Examination: Do labeling processes or historical decisions embedded in labels create disparities across groups?

- Representation Verification: Are all relevant demographic groups adequately represented in the data, including at intersections?

- Potential Proxy Identification: Which features might serve as proxies for protected attributes, enabling indirect discrimination?

This domain mapping helps you systematically examine potential bias sources throughout the data pipeline rather than focusing on isolated metrics. The auditing methodologies you develop will leverage this mapping to create structured approaches for identifying specific bias patterns that require intervention.

Conceptual Clarification

To clarify these abstract auditing concepts, consider the following analogies:

- Comprehensive data auditing is similar to a thorough medical examination that includes not just basic vitals but also blood tests, imaging, and family history. Just as a doctor needs this complete picture to make an accurate diagnosis before prescribing treatment, data scientists need comprehensive auditing to identify specific bias mechanisms before implementing fairness interventions. A superficial analysis would be like prescribing medication based only on a patient's temperature, potentially addressing symptoms without treating the underlying condition.

- Representation analysis functions like demographic surveys for voting districts. Voter representation analyses examine not just overall population counts but also geographic distribution, age breakdowns, and access barriers. Similarly, data representation analysis examines not just the presence of different groups but their distribution across feature space, outcome categories, and time periods. Just as voter underrepresentation can lead to policies that neglect certain communities, data underrepresentation leads to models that perform poorly for marginalized groups.

- Correlation pattern detection resembles forensic accounting that looks for suspicious patterns in financial transactions. Forensic accountants don't just check if the books balance but look for unusual relationships between accounts, unexpected timing patterns, and indirect connections that might indicate fraud. Similarly, correlation detection examines not just obvious relationships but subtle patterns and indirect associations that might enable discriminatory outcomes through proxy variables.

Intersectionality Consideration

Data auditing must explicitly address intersectionality—how multiple protected attributes interact to create unique patterns of advantage or disadvantage. Traditional auditing approaches often examine protected attributes independently, potentially missing critical disparities at demographic intersections.

As Crenshaw's (1989) foundational work demonstrated, discrimination often operates differently at the intersections of multiple marginalized identities. For instance, discrimination patterns affecting Black women may differ substantively from those affecting either Black men or white women, creating unique vulnerabilities that single-attribute analysis would miss.

In data auditing, this requires explicit examination of:

- Representation across demographic intersections, not just individual protected attributes

- Correlation patterns that may affect specific intersectional groups differently

- Label quality issues that may disproportionately impact certain intersectional categories

- Fairness metrics calculated for specific intersections rather than just aggregated groups

Buolamwini and Gebru's (2018) Gender Shades research provides a powerful example of intersectional auditing. Their analysis revealed that facial recognition systems had error rates of less than 1% for light-skinned males but nearly 35% for dark-skinned females—a disparity that would have been masked by examining either gender or skin tone independently.

For the Pre-processing Strategy Selector, intersectional auditing will ensure that interventions address bias patterns affecting all demographic groups, including those at intersections of multiple marginalized identities, rather than just improving aggregate metrics that might still mask significant disparities.

3. Practical Considerations

Implementation Framework

To conduct comprehensive data auditing for fairness, follow this structured methodology:

-

Initial Data Profiling and Documentation:

-

Document data sources, collection methodologies, and potential selection biases.

- Establish reference populations for demographic comparison (e.g., census data, domain-specific benchmarks).

- Identify protected attributes and potential proxy variables based on domain knowledge.

-

Create comprehensive data dictionaries documenting feature meanings, sources, and known limitations.

-

Multidimensional Representation Analysis:

-

Calculate demographic distributions across protected attributes and their intersections.

- Compare dataset demographics to reference populations to identify representation gaps.

- Analyze representation within outcome categories (e.g., positive vs. negative labels).

-

Visualize demographic distributions using appropriate techniques (e.g., mosaic plots for intersectionality).

-

Correlation and Association Pattern Analysis:

-

Calculate correlation matrices between protected attributes and other features.

- Implement mutual information analysis to capture non-linear relationships.

- Perform conditional independence testing to identify subtle association patterns.

-

Visualize correlation networks highlighting strongest associations with protected attributes.

-

Label Quality Assessment:

-

Analyze historical sources of labels and potential embedded biases.

- Compare label distributions across demographic groups and intersections.

- When possible, validate labels against external benchmarks or human experts.

-

Document any known issues or limitations in label quality.

-

Fairness Metric Baseline Calculation:

-

Implement multiple fairness metrics aligned with relevant fairness definitions.

- Calculate metrics across different demographic groups and intersections.

- Apply statistical significance testing to determine which disparities are meaningful.

- Create visualization dashboards highlighting key fairness gaps.

This methodological framework integrates with standard data science workflows by extending exploratory data analysis to explicitly consider fairness dimensions. While adding analytical complexity, these steps provide the foundation for targeted interventions rather than generic fixes.

Implementation Challenges

When implementing comprehensive data auditing, practitioners commonly face these challenges:

-

Missing or Incomplete Protected Attributes: Many datasets lack explicit protected attributes due to privacy regulations or collection limitations. Address this by:

-

Implementing privacy-preserving techniques that enable auditing without exposing individual identities.

- Using validated proxy methods when necessary, with clear documentation of their limitations.

- Conducting sensitivity analyses to understand how different assumptions about missing attributes affect conclusions.

-

Leveraging external data sources when appropriate to augment demographic information.

-

Balancing Comprehensiveness with Practicality: Thorough auditing can require significant resources, particularly for large datasets. Address this by:

-

Implementing staged approaches that begin with higher-level analysis and progressively add detail where needed.

- Prioritizing analysis based on domain-specific risks and historical patterns of discrimination.

- Automating routine aspects of auditing through reusable code libraries and workflows.

- Developing standardized templates that ensure key dimensions are examined without unnecessary duplication.

Successfully implementing comprehensive data auditing requires resources including statistical expertise to implement appropriate tests, domain knowledge to interpret findings in context, and computational tools capable of analyzing large, high-dimensional datasets efficiently.

Evaluation Approach

To assess whether your data auditing implementation is effective, apply these evaluation strategies:

-

Comprehensiveness Assessment:

-

Verify coverage of all relevant protected attributes and their intersections.

- Confirm examination of multiple potential bias sources (representation, correlation, labels).

- Check for both direct and indirect (proxy) discrimination pathway analysis.

-

Validate that temporal aspects of data have been considered when relevant.

-

Actionability Verification:

-

Ensure findings directly connect to specific pre-processing interventions.

- Verify that quantitative metrics establish clear priorities for intervention.

- Confirm that insights are documented in forms usable by team members with varied backgrounds.

- Check that conclusions would enable informed decisions about which intervention techniques to apply.

These evaluation approaches should be integrated into your organization's data documentation and model development protocols, creating clear connections between auditing findings and subsequent intervention decisions.

4. Case Study: Employment Algorithm Bias Audit

Scenario Context

A large technology company is developing a machine learning algorithm to screen job applications, predicting which candidates are likely to succeed based on resume data, previous employment history, and educational background. Early testing revealed concerning disparities in recommendation rates across demographic groups, prompting a comprehensive data audit before further development.

The dataset contains 50,000 past job applications with features including education level, years of experience, previous roles, skills, and historical hiring decisions. The company has explicitly included gender and race information for audit purposes. Key stakeholders include HR leaders concerned about fair hiring, data scientists developing the algorithm, and compliance officers ensuring regulatory requirements are met.

This scenario presents significant fairness challenges due to the historical underrepresentation of certain groups in tech roles and the potential for perpetuating existing patterns through automated screening. The application domain (employment) also faces specific legal requirements around non-discrimination that make fairness particularly critical.

Problem Analysis

Applying the comprehensive auditing methodology revealed several concerning bias patterns:

- Representation Analysis Issues: Initial demographic analysis showed reasonable overall representation of women (42%) and people of color (35%) in the dataset. However, intersectional analysis revealed severe underrepresentation of women of color in technical roles—they constituted only 4% of the dataset despite forming approximately 18% of the relevant labor market. This underrepresentation was masked when examining either gender or race independently.

-

Correlation Pattern Findings: Correlation analysis identified several problematic associations:

-

Strong correlation (r=0.62) between gender and certain skills (e.g., programming languages typically taught in computer science programs with low female enrollment)

- Significant correlation (r=0.47) between race and prestigious university attendance, potentially reflecting historical educational access disparities

-

Subtle but consistent association between demographic attributes and resume language patterns (more vibrant action words correlated with male candidates)

-

Label Quality Issues: When analyzing the historical hiring decisions used as labels:

-

Male candidates were 37% more likely to be hired than equally qualified female candidates for technical roles

- Candidates from prestigious universities were 45% more likely to be hired than candidates with equivalent qualifications from less well-known institutions

-

Subjective assessments like "cultural fit" showed significant disparities across demographic groups

-

Fairness Metric Baseline Results: Calculating fairness metrics on the raw data revealed:

-

A demographic parity difference of 18 percentage points between male and female candidates

- Equal opportunity disparities of 12 percentage points between white and non-white candidates

- Particularly severe disparities at intersections, with qualified women of color 28 percentage points less likely to be recommended than qualified white men

These findings demonstrated that multiple bias mechanisms were present in the data, requiring a combination of pre-processing interventions rather than a single approach.

Solution Implementation

Based on the audit findings, the team implemented a comprehensive approach:

-

For Representation Analysis Issues, they:

-

Documented specific demographic gaps, particularly at intersections of gender and race

- Created visualizations showing representation across job categories and qualifications

- Developed reference demographic profiles showing what balanced representation would look like

-

Identified high-risk demographic groups that would require special attention during intervention

-

For Correlation Pattern Findings, they:

-

Generated correlation maps showing how protected attributes related to other features

- Calculated mutual information scores to identify non-linear relationships

- Created feature groups based on their association with protected attributes

-

Developed a ranking of features by their potential for proxy discrimination

-

For Label Quality Issues, they:

-

Documented patterns of historical discrimination in hiring decisions

- Analyzed how subjective assessments differed across demographic groups

- Identified which hiring managers showed the most consistent bias patterns

-

Created adjusted labels that attempted to correct for historical discrimination

-

For Fairness Metric Baselines, they:

-

Implemented dashboards showing different fairness metrics across demographic groups

- Calculated confidence intervals to determine statistical significance of disparities

- Ranked fairness violations by severity to prioritize intervention efforts

- Established specific fairness goals based on legal requirements and company values

Throughout implementation, they maintained explicit focus on intersectionality, ensuring that groups at the intersection of multiple marginalizedidentities received particular attention.

Outcomes and Lessons

The comprehensive audit revealed several insights that would have been missed with less thorough analysis:

- The intersectional representation gaps were masked when examining either gender or race independently, demonstrating the importance of multidimensional analysis.

- Subtle linguistic patterns in resume descriptions functioned as unexpected proxies for gender, highlighting the need for association analysis beyond obvious correlations.

- The most severe fairness violations occurred at demographic intersections, confirming the critical importance of intersectional analysis.

- Different bias mechanisms required different interventions—representation gaps suggested reweighting approaches while proxy discrimination indicated a need for distribution transformation techniques.

Key challenges included communicating technical findings to non-technical stakeholders and balancing comprehensive analysis with practical time constraints.

The most generalizable lessons included:

- The importance of examining multiple bias mechanisms rather than assuming a single source of unfairness

- The value of quantifying bias to establish clear intervention priorities

- The necessity of intersectional analysis to prevent masking serious disparities affecting specific subgroups

These insights directly inform the development of the Pre-processing Strategy Selector, demonstrating how different audit findings point toward different intervention strategies.

5. Frequently Asked Questions

FAQ 1: Protected Attribute Limitations

Q: How can I conduct meaningful bias audits when my dataset lacks protected attributes like race or gender due to privacy regulations or collection limitations?

A: Privacy constraints require creative approaches, but meaningful auditing remains possible through several strategies. First, consider aggregate analysis using anonymized, consented demographic data that preserves individual privacy while enabling group-level analysis. Second, implement privacy-preserving computation techniques like differential privacy or secure multi-party computation that enable protected attribute analysis without exposing individual data. Third, when appropriate, use validated proxy methods—geographic analysis using census data or validated name-based inference—with explicit documentation of their limitations and uncertainty quantification. Fourth, conduct sensitivity analysis that explores how different assumptions about missing demographic distributions might affect your conclusions. Finally, consider collecting supplementary demographic data through anonymous, voluntary surveys specifically for audit purposes. The goal isn't perfect demographic knowledge but rather sufficient understanding to identify potential disparities and inform intervention strategies. Document all methods, limitations, and uncertainty to maintain transparency about what your audit can and cannot conclude with confidence.

FAQ 2: Balancing Depth With Practicality

Q: Given limited time and resources, how do I determine which aspects of data auditing to prioritize without missing critical bias patterns?

A: Effective prioritization requires a risk-based approach focused on your specific context. Start by leveraging historical knowledge—research documented discrimination patterns in your domain, as these suggest where to look first. For instance, prioritize gender analysis in hiring data or race analysis in lending data based on well-established historical biases. Next, conduct rapid preliminary scans of key fairness metrics across multiple dimensions, using the results to guide deeper investigation where disparities appear largest. Focus on outcome disparities first—if certain groups receive systematically different predictions, this warrants immediate investigation regardless of cause. Prioritize intersectional analysis for historically marginalized groups, as they often face unique bias patterns that simple demographic breakdowns miss. Additionally, leverage automation through reusable code libraries and templates to make comprehensive auditing more efficient. Finally, implement iterative approaches that start with critical areas and progressively expand coverage as resources permit. Remember that imperfect but systematic auditing is vastly preferable to no auditing at all, provided you clearly document which dimensions have been examined and which remain for future work.

6. Project Component Development

Component Description

In Unit 5, you will develop the data auditing component of the Pre-processing Strategy Selector. This component will provide a structured methodology for identifying specific bias patterns in training data and connecting these patterns to appropriate pre-processing interventions.

The deliverable will take the form of a comprehensive auditing framework with analytical templates, visualization approaches, metric calculations, and interpretation guidelines that systematically uncover different types of bias and guide intervention selection.

Development Steps

- Create a Multidimensional Representation Analysis Template: Develop structured approaches for examining demographic distributions across protected attributes and their intersections, comparing these to reference populations, and identifying representation gaps that might require reweighting or resampling interventions.

- Build a Correlation Pattern Detection Framework: Design analytical techniques for identifying problematic associations between protected attributes and other features, with special attention to potential proxy variables that might enable indirect discrimination and require transformation interventions.

- Develop a Label Quality Assessment Approach: Create methodologies for evaluating potential biases in training labels, including historical discrimination embedded in decision processes and annotator biases that might necessitate relabeling or label smoothing techniques.

Integration Approach

This auditing component will interface with other parts of the Pre-processing Strategy Selector by:

- Providing the diagnostic inputs that guide intervention selection, connecting specific bias patterns to appropriate techniques.

- Establishing evaluation metrics that can be used to assess intervention effectiveness.

- Creating visualization approaches that help communicate findings to both technical and non-technical stakeholders.

To enable successful integration, develop standardized documentation formats for auditing findings, use consistent terminology across components, and create explicit connections between identified bias patterns and specific intervention techniques covered in subsequent Units.

7. Summary and Next Steps

Key Takeaways

This Unit has established the fundamental importance of comprehensive data auditing as the diagnostic foundation for all fairness interventions. Key insights include:

- Multidimensional representation analysis must go beyond simple demographic breakdowns to examine intersectionality and representation within outcome categories, revealing disparities that might be masked by aggregate statistics.

- Correlation pattern detection systematically identifies both direct associations and subtle relationships that might enable proxy discrimination, even when protected attributes are explicitly excluded from models.

- Label quality assessment examines how historical discrimination or annotator biases might be embedded in training labels, necessitating intervention at the label level rather than just in features.

- Fairness metric baseline calculation establishes quantitative measures of bias that guide intervention priorities and provide benchmarks for assessing improvement.

These concepts directly address our guiding questions by providing systematic frameworks for uncovering bias patterns in training data and distinguishing between different bias mechanisms that require different intervention approaches.

Application Guidance

To apply these concepts in your practical work:

- Start by thoroughly documenting your data sources, collection methodologies, and potential selection biases before beginning quantitative analysis.

- Implement staged auditing that progressively adds detail—begin with basic demographic breakdowns, then add intersectional analysis, correlation detection, and label quality assessment.

- Use multiple visualization techniques to communicate findings effectively, particularly for complex intersectional patterns that may be difficult to capture in tables or summary statistics.

- Establish clear connections between audit findings and potential interventions, beginning to formulate hypotheses about which techniques might be most effective.

For organizations new to fairness auditing, start by implementing the most critical components (representation analysis and basic correlation detection) while building capacity for more sophisticated approaches. Even basic auditing is substantially better than none, provided its limitations are clearly documented.

Looking Ahead

In the next Unit, we will build on this auditing foundation by exploring reweighting and resampling techniques for addressing representation disparities. You will learn how these approaches can effectively counteract the representation gaps identified through comprehensive auditing without modifying feature distributions.

The auditing methodologies you've developed in this Unit will directly inform which reweighting approaches are most appropriate for specific bias patterns. By understanding exactly how representation imbalances manifest in your data, you'll be able to design targeted reweighting strategies rather than applying generic techniques that might not address the specific disparities present.

References

Angwin, J., Larson, J., Mattu, S., & Kirchner, L. (2016). Machine bias. ProPublica. Retrieved from https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

Barocas, S., Hardt, M., & Narayanan, A. (2019). Fairness and machine learning: Limitations and opportunities. Retrieved from https://fairmlbook.org/

Bertrand, M., & Mullainathan, S. (2004). Are Emily and Greg more employable than Lakisha and Jamal? A field experiment on labor market discrimination. American Economic Review, 94(4), 991-1013.

Buolamwini, J., & Gebru, T. (2018). Gender shades: Intersectional accuracy disparities in commercial gender classification. In Proceedings of the 1st Conference on Fairness, Accountability, and Transparency (pp. 77-91).

Crenshaw, K. (1989). Demarginalizing the intersection of race and sex: A black feminist critique of antidiscrimination doctrine, feminist theory, and antiracist politics. University of Chicago Legal Forum, 1989(1), 139-167.

Gebru, T., Morgenstern, J., Vecchione, B., Vaughan, J. W., Wallach, H., Daumé III, H., & Crawford, K. (2021). Datasheets for datasets. Communications of the ACM, 64(12), 86-92.

Jacobs, A. Z., & Wallach, H. (2021). Measurement and fairness. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (pp. 375-385).

Unit 2

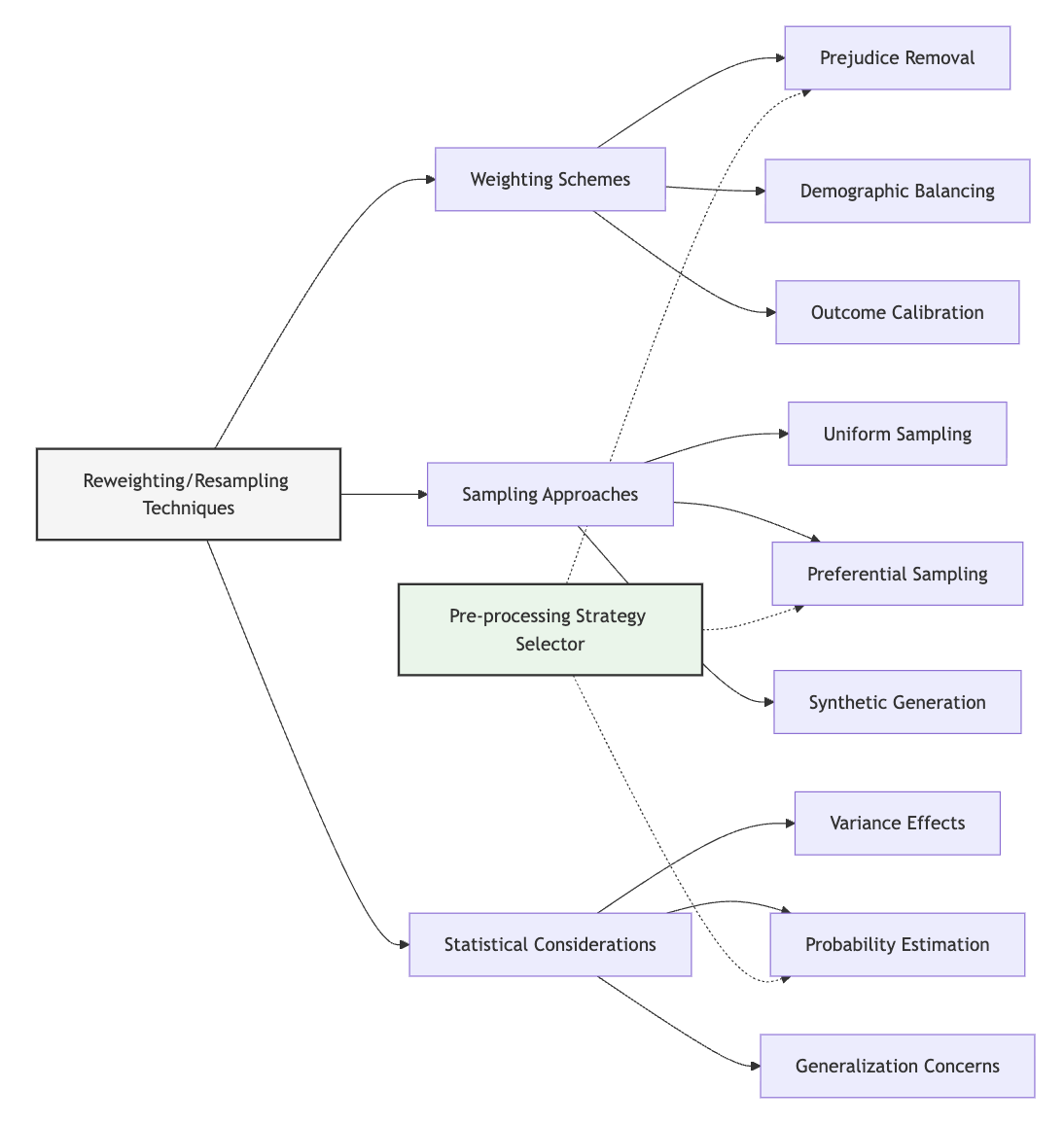

Unit 2: Reweighting and Resampling Techniques

1. Conceptual Foundation and Relevance

Guiding Questions

- Question 1: How can we systematically adjust the influence of training instances to mitigate bias without modifying feature values or model architectures?

- Question 2: When are reweighting and resampling approaches more appropriate than other fairness interventions, and how do we implement them while preserving statistical validity?

Conceptual Context

Reweighting and resampling techniques represent foundational data-level interventions that address fairness by modifying the influence of training instances rather than transforming their features. This approach is particularly valuable because it preserves the original feature distributions within groups while adjusting how different instances impact model training.

Consider a common fairness challenge: a loan approval dataset contains far fewer examples of approved loans for applicants from minority neighborhoods due to historical redlining practices. Even with protected attributes removed, this representation disparity can lead models to perpetuate discriminatory patterns. Reweighting and resampling techniques directly address this imbalance by giving appropriate influence to underrepresented groups, creating a more equitable foundation for model training.

The significance of these techniques lies in their versatility and relative simplicity. Unlike more complex interventions that modify feature distributions or algorithm internals, reweighting and resampling can often be implemented as preprocessing steps that integrate seamlessly with existing ML workflows. As Kamiran and Calders (2012) demonstrated, carefully designed instance weighting schemes can significantly reduce discrimination while maintaining predictive performance.

This Unit builds directly on the comprehensive data auditing approaches established in Unit 1, translating identified bias patterns into specific reweighting and resampling strategies. It also lays essential groundwork for the more complex distribution transformation techniques you'll explore in Unit 3, providing complementary approaches that address different types of bias. Together, these techniques will form critical options in the Pre-processing Strategy Selector you'll develop in Unit 5, enabling you to select appropriate data-level interventions based on specific fairness challenges.

2. Key Concepts

Instance Influence in Model Training

The concept of instance influence—how strongly each training example impacts model learning—is fundamental to understanding reweighting and resampling techniques. This concept is crucial for AI fairness because it provides a mechanism for addressing representation disparities without modifying the inherent characteristics of the data.

Standard training procedures typically give equal importance to each instance (in unweighted approaches) or use weights based on factors unrelated to fairness (such as importance sampling for computational efficiency). This can lead models to minimize average error, which inherently prioritizes patterns common in majority groups while potentially overlooking patterns specific to underrepresented groups.

This concept directly connects to fairness by enabling the adjustment of instance influence based on protected attributes and outcomes. By strategically modifying how strongly different examples impact model training, reweighting and resampling can counteract historical imbalances and ensure all demographic groups appropriately influence the resulting model.

Kamiran and Calders (2012) formalized this approach in their seminal work on preprocessing techniques for fairness. They demonstrated that by assigning weights inversely proportional to the representation of specific attribute-outcome combinations, models could achieve significantly improved fairness metrics while maintaining reasonable predictive performance (Kamiran & Calders, 2012).

For example, in a hiring algorithm trained on historical data, qualified applicants from underrepresented groups might receive higher weights to counteract their historical underrepresentation, ensuring these patterns receive appropriate consideration during model training.

For the Pre-processing Strategy Selector we'll develop in Unit 5, understanding instance influence is essential because it establishes the fundamental mechanism through which reweighting and resampling techniques operate. This understanding will guide the development of selection criteria that determine when these approaches are most appropriate based on specific bias patterns and fairness objectives.

Reweighting Approaches for Fairness

Reweighting approaches explicitly assign different importance weights to training instances based on their characteristics, directly modifying how strongly each example influences model learning without changing the dataset size or composition. This technique is essential for AI fairness because it enables fine-grained control over the influence of different demographic groups and outcome combinations.

Reweighting builds on the concept of instance influence by providing specific methodologies for determining appropriate weight values. Rather than treating all instances equally, reweighting incorporates fairness considerations into the weight assignment process, ensuring traditionally underrepresented groups have appropriate impact on model training.

Several reweighting schemes have been developed specifically for fairness. Kamiran and Calders (2012) introduced a prejudice remover approach that assigns weights based on the combination of protected attributes and outcomes, giving higher weights to combinations that contradict discriminatory patterns. Building on this work, Calders and Verwer (2010) proposed techniques that assign weights to create conditional independence between protected attributes and outcomes.

A practical example comes from loan approval: in historical data, minority applicants might have disproportionately high rejection rates due to discriminatory practices rather than creditworthiness. Reweighting can assign higher importance to minority approvals and majority rejections that contradict these biased patterns, enabling the model to learn more fair decision boundaries.

For the Pre-processing Strategy Selector, understanding different reweighting approaches is crucial for matching specific techniques to appropriate bias patterns. The selector will need to incorporate guidance on which reweighting methods are most suitable for different fairness definitions, data distributions, and implementation constraints.

Resampling Strategies for Balanced Learning

Resampling strategies modify the training data by selectively including or duplicating instances to create a more balanced dataset for model training. This concept is vital for AI fairness because it addresses representation disparities through direct modification of the training set composition rather than through mathematical weighting during optimization.

While reweighting adjusts instance influence through mathematical weights, resampling physically alters which examples the model sees during training and how often. This difference makes resampling particularly valuable when working with algorithms or frameworks that don't support instance weights or when the implementation must occur entirely in the data preparation pipeline.

Chawla et al. (2002) developed the Synthetic Minority Over-sampling Technique (SMOTE) to address class imbalance by generating synthetic examples for minority classes. While not originally designed for fairness, this technique has been adapted to address demographic representation disparities. Feldman et al. (2015) extended these concepts with fairness-specific sampling approaches that selectively include examples to reduce disparate impact.

For example, in a medical diagnosis algorithm where data from certain demographic groups is limited, selective oversampling of these groups can ensure the model doesn't simply optimize for majority populations while underserving minorities. Various approaches include:

- Uniform sampling that selects the same number of examples from each group

- Preferential sampling that selectively includes examples that reduce bias

- Synthetic techniques that generate new examples for underrepresented groups

For our Pre-processing Strategy Selector, understanding resampling strategies will be essential for determining when they should be preferred over reweighting approaches. The selector will need to incorporate decision criteria based on algorithm compatibility, implementation constraints, and specific bias patterns identified through auditing.

Statistical Considerations and Validity

Modifying instance influence through reweighting or resampling introduces important statistical considerations that must be addressed to ensure interventions improve fairness without compromising model validity. This concept is critical for AI fairness because naive application of these techniques can lead to problems like overfitting, increased variance, or distorted probability estimates.

This concept interacts with reweighting and resampling approaches by highlighting the potential trade-offs and complications they introduce. While these techniques can effectively reduce bias, they alter the statistical properties of the training process in ways that require careful consideration and potential compensation.

Key statistical challenges include:

- Sample variance effects: Reweighting effectively reduces the sample size, potentially increasing model variance

- Estimation bias: Modified sampling distributions can distort probability estimates

- Generalization concerns: Extreme weights or sampling rates may lead to overfitting specific patterns

Zadrozny et al. (2003) studied how reweighting affects model calibration and proposed techniques to preserve proper probability estimation when using weighted samples. More recently, Iosifidis and Ntoutsi (2018) examined the interaction between fairness-oriented resampling and model generalization, proposing approaches that balance fairness improvements with statistical validity.

For example, in a credit scoring model, aggressive oversampling of minority approvals might improve demographic parity but could also lead to poor calibration where predicted probabilities no longer accurately reflect true risks. Mitigation approaches include regularization, cross-validation procedures specifically designed for weighted data, and combining reweighting with other techniques.

For our Pre-processing Strategy Selector, these statistical considerations will be crucial for guiding appropriate intervention configuration. The selector will need to incorporate guidelines for balancing fairness improvements against statistical concerns, potentially suggesting complementary techniques that address both simultaneously.

Domain Modeling Perspective

From a domain modeling perspective, reweighting and resampling techniques map to specific components of ML systems:

- Data Preparation Pipeline: Resampling physically modifies the dataset composition through selective inclusion and duplication

- Training Process: Reweighting modifies the optimization objective by adjusting the influence of different instances

- Model Evaluation: Weighted validation ensures fairness improvements don't come at the cost of essential performance characteristics

- Deployment Monitoring: Weight drift detection prevents gradual deviation from the intended balance

This domain mapping helps you understand how reweighting and resampling integrate with the ML workflow rather than viewing them as isolated techniques. The Pre-processing Strategy Selector will leverage this mapping to guide appropriate intervention selection based on where in the pipeline modifications can most effectively be implemented.

Conceptual Clarification

To clarify these abstract concepts, consider the following analogies:

- Reweighting for fairness functions like weighted voting in a diverse committee. In traditional voting, each person gets one equal vote, which means the largest group always determines the outcome. Weighted voting gives stronger representation to minority perspectives by assigning different voting powers based on representation—not because individual minority votes are inherently more valuable, but to ensure all perspectives appropriately influence the final decision. Similarly, reweighting in machine learning doesn't change what examples the model sees but adjusts how strongly different examples influence the final model, ensuring underrepresented groups maintain appropriate impact on model decisions.

- Resampling for fairness is similar to curating a reading list for a comprehensive education. Rather than randomly selecting books in proportion to what's available (which would overwhelmingly represent majority perspectives), an educator might intentionally include more works from underrepresented authors to ensure diverse viewpoints. The goal isn't to misrepresent the overall publishing landscape but to create a more balanced educational experience. Similarly, fairness-aware resampling doesn't pretend the world lacks imbalances but creates a more balanced learning environment for the model that prevents it from overlooking important patterns in underrepresented groups.

- Statistical validity considerations in reweighting resemble adjusting for uneven jury selection. When assessing whether jury selection was fair, statisticians must account for both the demographic composition and the appropriate statistical uncertainty—weighted analyses require larger margins of error. Similarly, when using reweighting for fairness, we must adapt our statistical approaches to account for the modified influence distribution, ensuring our fairness improvements come with appropriate statistical guarantees rather than introducing new forms of uncertainty.

Intersectionality Consideration

Reweighting and resampling approaches must explicitly address how multiple protected attributes interact to create unique representation challenges at demographic intersections. Traditional approaches often address protected attributes independently, potentially missing critical disparities at intersections of multiple attributes.

For example, gender bias and racial bias in hiring data might each appear modest when examined separately, but their intersection could reveal severe underrepresentation of women of color. Standard reweighting approaches addressing gender and race independently might fail to give appropriate influence to this specific intersection.

Ghosh et al. (2021) demonstrated that intersectional fairness requires specialized weighting schemes that explicitly account for multiple attribute combinations rather than addressing each attribute in isolation. Their research showed that addressing attributes separately can sometimes improve fairness along individual dimensions while exacerbating disparities at specific intersections.

Implementing intersectional reweighting and resampling requires:

- Explicitly considering all relevant attribute combinations during weight assignment

- Ensuring sufficient representation of intersectional groups through stratified sampling

- Recognizing statistical challenges when working with smaller intersectional subgroups

- Developing appropriate evaluation metrics that assess fairness across intersections

The Pre-processing Strategy Selector must incorporate these intersectional considerations explicitly, guiding users toward reweighting and resampling configurations that address potential fairness issues across multiple, overlapping demographic dimensions rather than treating each protected attribute in isolation.

3. Practical Considerations

Implementation Framework

To implement effective reweighting and resampling for fairness, follow this structured methodology:

-

Weight Determination Strategy:

-

For addressing representation disparities: Calculate weights inversely proportional to demographic group representation to ensure balanced influence.

- For countering outcome disparities: Assign weights based on the protected attribute-outcome combinations, giving higher weight to combinations that contradict biased patterns (e.g., minority approvals in a system with historical bias toward rejection).

- For addressing conditional outcome disparities: Implement weights that equalize outcomes across groups within similar feature value ranges.

-

Document weight calculation formulas and justifications for specific approaches.

-

Implementation Approaches:

-

For models supporting instance weights: Directly incorporate calculated weights in the training process through the model's built-in weighting parameters.

- For frameworks without native weight support: Convert weights to sampling probabilities and implement weighted sampling during mini-batch creation.

- For preprocessing-only pipelines: Transform weights into discrete resampling by sampling instances with probability proportional to their weights.

-

Document implementation details and code patterns for replication.

-

Statistical Validity Preservation:

-

Implement cross-validation procedures designed for weighted data to ensure robust evaluation.

- Consider regularization adjustments to compensate for effective sample size reduction from weighting.

- Monitor calibration metrics to ensure probability estimates remain accurate despite modified training distribution.

- Document statistical adjustments and their effects on both fairness and performance metrics.

These methodologies integrate with standard ML workflows by providing specific implementation patterns for different stages of the pipeline. Most modern ML frameworks support instance weights during training, making reweighting relatively straightforward to implement. Resampling can be implemented during data preparation, making it compatible with any modeling approach.

Implementation Challenges

When implementing reweighting and resampling for fairness, practitioners commonly face these challenges:

-

Balancing Fairness and Performance: Aggressive reweighting to address severe disparities can sometimes degrade overall model performance. Address this by:

-

Implementing progressive weighting approaches that start with moderate adjustments and increase gradually while monitoring performance impacts.

- Exploring hybrid approaches that combine reweighting with complementary fairness techniques.

- Developing explicit trade-off frameworks that quantify fairness improvements against performance impacts.

-

Using regularization techniques specifically designed for weighted training to mitigate overfitting to heavily weighted samples.

-

Handling Multiple Protected Attributes: Addressing intersectional fairness through reweighting becomes complicated with multiple protected attributes creating numerous intersectional groups. Address this by:

-

Implementing hierarchical weighting schemes that first balance major groups, then address intersectional disparities.

- Using smoothing techniques for small intersectional groups to avoid extreme weights.

- Employing dimensionality reduction approaches that identify the most critical intersections requiring attention.

- Documenting intersectional performance explicitly to ensure improvements in aggregate metrics don't mask issues at specific intersections.

Successfully implementing reweighting and resampling requires resources including statistical expertise to verify validity, computational resources for experimenting with different weighting schemes, and organizational willingness to accept potential trade-offs between different performance dimensions.

Evaluation Approach

To assess whether your reweighting or resampling intervention is effective, implement these evaluation strategies:

-

Fairness Impact Assessment:

-

Compare fairness metrics before and after intervention across multiple definitions (demographic parity, equal opportunity, etc.).

- Measure improvements in the specific fairness criteria targeted by the intervention.

- Evaluate fairness across intersectional categories, not just in aggregate.

-

Document both relative and absolute improvements in fairness metrics.

-

Performance Impact Analysis:

-

Assess changes in overall predictive performance (accuracy, F1, AUC) using appropriate cross-validation for weighted data.

- Measure performance specifically for previously disadvantaged groups to ensure improvements.

- Evaluate model calibration to ensure probability estimates remain reliable.

-

Document performance changes across different subgroups and intersections.

-

Stability and Robustness Testing:

-

Verify fairness improvements persist across different random splits of the data.

- Test sensitivity to variations in the weighting scheme parameters.

- Assess how the intervention performs with new data or shifting distributions.

- Document robustness findings and potential limitations.

These evaluation approaches should be integrated with your organization's broader model assessment framework, providing a comprehensive view of how reweighting or resampling affects both fairness and other essential performance characteristics.

4. Case Study: Medical Diagnosis Algorithm

Scenario Context

A healthcare organization is developing a diagnostic algorithm to detect early signs of cardiovascular disease from patient data. The algorithm analyzes lab results, vital signs, medical history, demographic information, and lifestyle factors to predict disease risk, helping physicians prioritize preventive care for high-risk patients.

Initial analysis revealed concerning performance disparities: the algorithm showed significantly lower sensitivity (true positive rate) for female patients compared to male patients, potentially leading to delayed diagnosis and treatment for women. Further investigation indicated this disparity stemmed from historical underdiagnosis of cardiovascular disease in women in the training data, as heart disease has traditionally been considered primarily a "male disease" despite being the leading cause of death for both men and women.

This scenario involves multiple stakeholders with different priorities: clinicians seeking accurate diagnostic support, hospital administrators concerned about efficiency and liability, patients requiring equitable care, and regulatory bodies monitoring healthcare AI for fairness. The fairness implications are significant given the life-or-death nature of medical decisions and the healthcare system's ethical obligation to provide equitable care.

Problem Analysis

Applying reweighting and resampling concepts to this scenario reveals several key insights:

- Representation Analysis: The training data reflects historical patterns where female cardiovascular disease was frequently underdiagnosed. This isn't merely a representation disparity in quantity (there may be sufficient female patients in the dataset) but rather a quality issue—female patients with early disease signs were more likely to be labeled as healthy in the historical data.

- Instance Influence Assessment: The standard equal-weight training approach would naturally optimize for patterns most common in the data, essentially learning to replicate the historical underdiagnosis of female patients rather than identifying true risk patterns across genders.

- Statistical Considerations: Simply removing gender from the feature set wouldn't solve the problem because the bias appears in the outcome labels themselves. Furthermore, many symptoms and risk factors present differently across genders, making gender-specific patterns genuinely important for accurate diagnosis.

The core challenge is creating a model that learns appropriate risk patterns for both male and female patients despite the historical labeling bias in the training data. This requires modifying instance influence to ensure female patients with disease appropriately impact model training.

From an intersectional perspective, the analysis becomes even more nuanced. The audit revealed that the sensitivity disparity was particularly pronounced for women of color and older women, suggesting unique challenges at these intersections that wouldn't be addressed by a gender-only intervention.

Solution Implementation

To address these fairness concerns through reweighting and resampling, the team implemented a multi-faceted approach:

-

Outcome-Aware Reweighting: The team implemented a reweighting scheme that assigned higher weights to:

-

Female patients with confirmed cardiovascular disease (to increase their influence on the model)

- Male patients without cardiovascular disease (to balance the distribution)

This approach directly addressed the historical underdiagnosis bias by giving appropriate influence to female cardiovascular cases during model training. The weighting formula used:

w(gender, outcome) = baseline_weight * (target_ratio / observed_ratio)

Where target_ratio represented a more balanced distribution based on epidemiological data rather than historical diagnosis rates.

- Intersectional Stratification: Recognizing the intersectional nature of the bias, the team implemented stratified resampling that explicitly addressed representations at intersections of gender, age, and race. This ensured sufficient representation of previously underrepresented intersectional groups.

-

Statistical Validity Preservation: To maintain statistical rigor while implementing these adjustments, the team:

-

Used modified cross-validation procedures appropriate for weighted data

- Implemented regularization techniques to prevent overfitting to heavily weighted samples

-

Monitored calibration metrics to ensure predicted probabilities remained accurate

-

Evaluation Framework: The intervention was evaluated through:

-

Gender-specific sensitivity and specificity metrics

- Intersectional performance analysis across age, gender, and race groups

- Clinical validation by cardiologists reviewing model predictions

This implementation balanced fairness improvements with clinical validity, ensuring the model would provide more equitable risk assessment while maintaining high overall diagnostic accuracy.

Outcomes and Lessons

The reweighting and resampling intervention produced several important outcomes:

- The gender disparity in sensitivity decreased by 68% while maintaining overall predictive performance.

- Intersectional analysis showed improvements across most demographic intersections, though some disparities persisted for specific subgroups.

- Clinical review confirmed the adjusted model made more appropriate risk assessments for female patients presenting with symptoms that had historically been underdiagnosed.

Key challenges included:

- Finding the optimal weighting balance that improved fairness without compromising overall performance

- Addressing sparse data at some demographic intersections

- Ensuring model calibration remained accurate despite the modified training distribution

The most generalizable lessons included:

- The importance of outcome-aware reweighting when bias exists in historical labels rather than merely in representation quantity.

- The value of combining reweighting with domain expertise to establish appropriate target distributions rather than simply enforcing statistical parity.

- The need for comprehensive evaluation across multiple fairness criteria and performance metrics to understand intervention effects fully.

These insights directly inform the Pre-processing Strategy Selector, demonstrating when reweighting approaches are particularly valuable (label bias cases) and how they should be configured (based on epidemiologically sound target distributions rather than simple statistical parity).

5. Frequently Asked Questions

FAQ 1: Reweighting Vs. Resampling Selection

Q: When should I choose reweighting over resampling or vice versa for addressing fairness issues?

A: The choice between reweighting and resampling depends on several practical factors. Choose reweighting when: (1) your modeling framework directly supports instance weights during training, enabling precise influence adjustments without physically altering the dataset; (2) you need fine-grained, continuous control over instance influence rather than discrete inclusion/exclusion; or (3) memory and storage constraints make duplicating data points impractical. Prefer resampling when: (1) working with frameworks or algorithms that don't natively support instance weights; (2) implementing fairness as a preprocessing step separate from model training; or (3) other team members need to work with a concrete, balanced dataset rather than understanding weighting schemes. In practice, reweighting offers more precise control but requires model compatibility, while resampling offers broader compatibility but with less granular control. For high-stakes applications, consider implementing both approaches in parallel during development to determine which provides better fairness improvements in your specific context.

FAQ 2: Weighting Scheme Design

Q: How do I determine the optimal weighting scheme for my specific fairness objectives without compromising model performance?

A: Designing effective weighting schemes requires balancing fairness goals with statistical considerations. Start by clearly defining your primary fairness objective—whether demographic parity, equal opportunity, or another metric—as this will guide your weighting approach. For representation disparities, weights inversely proportional to group size provide a starting point. For outcome disparities, weights should be based on protected attribute-outcome combinations, giving higher influence to combinations that contradict bias patterns. However, avoid extreme weights that might lead to overfitting or instability. Implement progressive weighting: begin with moderate adjustments (e.g., square root of the theoretical optimal weights) and increase gradually while monitoring both fairness improvements and performance metrics on a validation set. Use cross-validation specifically designed for weighted data to ensure reliable evaluation. Consider combining weighting with regularization techniques that mitigate potential overfitting to heavily weighted samples. Document multiple weighting schemes and their effects on both fairness and performance metrics, enabling explicit trade-off decisions based on your application's specific requirements and constraints.

6. Project Component Development

Component Description

In Unit 5, you will develop the reweighting and resampling section of the Pre-processing Strategy Selector. This component will provide systematic guidance for selecting and configuring appropriate reweighting and resampling techniques based on specific bias patterns, data characteristics, and fairness objectives.

The deliverable will include a decision framework for determining when reweighting and resampling are appropriate, comparisons of different techniques with their strengths and limitations, configuration guidelines for implementation, and evaluation approaches for assessing effectiveness. These elements will form a critical part of the overall Pre-processing Strategy Selector, enabling practitioners to make informed decisions about when and how to implement these techniques.

Development Steps

- Create a Technique Comparison Matrix: Develop a comprehensive comparison of different reweighting and resampling approaches, including their methodological foundations, implementation requirements, appropriate use cases, and limitations. This matrix will serve as a reference for matching specific techniques to appropriate contexts.

- Design Selection Decision Trees: Create structured decision flows that guide practitioners from identified bias patterns toward appropriate reweighting or resampling techniques. These decision trees should incorporate factors like bias type, data characteristics, model compatibility, and implementation constraints.

- Develop Configuration Guidelines: Build detailed guidelines for implementing and tuning selected techniques, including formulas for weight calculation, sampling approaches, parameter selection guidance, and code patterns for common frameworks. These guidelines should enable effective implementation once a technique has been selected.

Integration Approach

This reweighting and resampling component will interface with other parts of the Pre-processing Strategy Selector by:

- Building on the bias patterns identified through the auditing approaches from Unit 1.

- Establishing clear boundaries with the transformation techniques from Unit 3, indicating when reweighting/resampling should be preferred over or combined with feature transformations.

- Providing integration patterns for the data generation approaches from Unit 4, showing how synthetic data can complement reweighting/resampling.

To enable successful integration, use consistent terminology across selector components, establish clear decision criteria that distinguish when each approach is most appropriate, and create explicit guidance for combining techniques when necessary.

7. Summary and Next Steps

Key Takeaways

Throughout this Unit, you've explored how reweighting and resampling techniques provide powerful tools for addressing fairness through data-level interventions. Key insights include:

- Instance influence is a fundamental lever for fairness that enables addressing representation disparities without modifying feature distributions. By adjusting how strongly different examples impact model training, these techniques can counteract historical imbalances that would otherwise be perpetuated by standard learning approaches.

- Different reweighting schemes target different bias patterns, from simple demographic balancing to more sophisticated approaches that consider protected attribute-outcome combinations. Understanding these distinctions helps match specific techniques to appropriate fairness challenges.

- Implementation approaches span the ML pipeline, from preprocessing through resampling to direct weight incorporation during training. This flexibility enables integration with diverse frameworks and workflows while addressing fairness at the data level.

- Statistical validity requires careful consideration to ensure fairness improvements don't come at the cost of model robustness. Techniques like specialized cross-validation, regularization adjustments, and calibration monitoring help maintain statistical rigor while implementing fairness interventions.

These concepts address our guiding questions by showing how instance influence can be systematically adjusted to improve fairness without modifying features or model architectures, and by establishing when reweighting and resampling are most appropriate compared to other fairness interventions.

Application Guidance

To apply these concepts in your practical work:

- Start with comprehensive data auditing to identify specific bias patterns, as described in Unit 1. Understanding the exact nature of representation and outcome disparities is essential for selecting appropriate reweighting or resampling approaches.

- When representation disparities are the primary concern, implement group-based weighting inversely proportional to group size or sample according to target distributions that better represent the population of interest.

- When outcome disparities are the key issue, implement more sophisticated schemes that consider protected attribute-outcome combinations, giving higher weight to combinations that contradict biased patterns.

- Always evaluate interventions across multiple metrics—both fairness and performance—to understand the full impact of your adjustments and potential trade-offs.

For organizations new to fairness interventions, reweighting and resampling often represent accessible starting points that can integrate with existing workflows without requiring fundamental architectural changes. Begin with moderate adjustments and increase intervention strength incrementally while monitoring both fairness improvements and potential performance impacts.

Looking Ahead

In the next Unit, we will build on these foundations by exploring distribution transformation approaches that directly modify feature representations to reduce problematic correlations. While reweighting and resampling adjust instance influence without changing features, transformation techniques provide complementary approaches that address bias at the feature level.

The understanding you've developed about reweighting and resampling will provide important context for these transformation techniques, as both approaches represent different strategies for data-level fairness interventions. Your knowledge of when and how to implement reweighting and resampling will help you understand where transformation approaches might provide better alternatives or valuable complements in your fairness toolkit.

References

Calders, T., & Verwer, S. (2010). Three naive Bayes approaches for discrimination-free classification. Data Mining and Knowledge Discovery, 21(2), 277-292.

Chawla, N. V., Bowyer, K. W., Hall, L. O., & Kegelmeyer, W. P. (2002). SMOTE: Synthetic minority over-sampling technique. Journal of Artificial Intelligence Research, 16, 321-357.

Feldman, M., Friedler, S. A., Moeller, J., Scheidegger, C., & Venkatasubramanian, S. (2015). Certifying and removing disparate impact. In Proceedings of the 21st ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (pp. 259-268).

Ghosh, A., Chhabra, A., Ling, S. Y., Narayanan, P., & Koyejo, S. (2021). An intersectional framework for fair and accurate AI. arXiv preprint arXiv:2105.01280.