Part 4: Prediction-Level Interventions (Post-Processing)

Context

Post-processing interventions fix fairness issues after model training, offering solutions even for already-deployed systems.

This Part explores techniques that transform model outputs to satisfy fairness criteria without retraining. You'll learn to adjust decision thresholds, recalibrate probability estimates, and transform scores to achieve fairness. Many organizations face practical constraints that make model retraining costly or impossible—deployed models serving thousands of users, legacy systems resistant to change, or regulatory environments requiring extensive validation for new models.

Threshold optimization sets different decision boundaries for different groups. A loan approval system might apply one threshold to male applicants and another to female applicants, ensuring equal opportunity despite different score distributions. Hardt et al. (2016) show how post-processing can achieve equal error rates across groups without sacrificing predictive power.

Calibration ensures probability estimates mean the same thing across groups. When a credit model says there's a 20% default risk, that probability should reflect reality regardless of the applicant's demographic attributes. Pleiss et al. (2017) demonstrate how miscalibration creates uneven impact when decisions rely on probability thresholds.

These techniques connect to different fairness definitions. Score transformation serves demographic parity; threshold adjustment achieves equal opportunity; calibration ensures equalized odds. Your choice shapes who gets loans, jobs, or housing.

The Post-Processing Fairness Toolkit you'll develop in Unit 5 represents the fourth component of the Fairness Intervention Playbook (Sprint Project). This toolkit will help you select appropriate post-processing techniques based on fairness goals, model outputs, and operational constraints.

Learning Objectives

By the end of this Part, you will be able to:

- Implement threshold optimization for fairness criteria. You will develop methods that identify optimal decision thresholds for different groups, enabling fair decisions in classification systems while maintaining performance.

- Design calibration techniques that equalize error meaning across groups. You will create approaches ensuring probability estimates have consistent interpretation regardless of protected attributes, preventing disparate impact in risk assessment applications.

- Apply score transformation methods that satisfy fairness constraints. You will implement techniques that modify raw scores to achieve fairness goals while preserving predictive ordering, balancing fairness with usefulness.

- Evaluate trade-offs between different post-processing approaches. You will assess how various techniques affect both fairness metrics and business objectives, making informed decisions about which interventions best suit specific contexts.

- Integrate post-processing methods into production environments. You will develop strategies for implementing fairness adjustments in deployed systems, creating practical solutions that improve fairness without system disruption.

Units

Unit 1

Unit 1: Threshold Optimization Techniques

1. Conceptual Foundation and Relevance

Guiding Questions

- Question 1: How can we adjust classification thresholds after model training to satisfy specific fairness definitions without requiring retraining or model modification?

- Question 2: What are the mathematical relationships between threshold selection and different fairness metrics, and how do we navigate the inherent trade-offs between them?

Conceptual Context

Threshold optimization represents one of the most accessible and powerful fairness interventions in your technical toolkit. Unlike pre-processing or in-processing techniques that require modifying data or model architecture, threshold optimization works directly with model outputs, making it applicable even to black-box models or systems where retraining is impractical.

This approach matters because classification decisions in machine learning typically involve applying a threshold to probability scores (e.g., classifying as "positive" when probability > 0.5). The critical insight is that using a single threshold for all demographic groups often perpetuates or amplifies existing biases, even when the underlying probability estimates are well-calibrated. By setting different thresholds for different groups, you can directly address disparities in false positive and false negative rates while minimizing accuracy loss.

As Hardt, Price, and Srebro (2016) demonstrated in their seminal paper, group-specific thresholds can satisfy fairness criteria such as equal opportunity or equalized odds without modifying the underlying model. This makes threshold optimization particularly valuable when working with fixed models, black-box systems, or environments where deployment speed matters more than theoretical elegance.

This Unit builds on the causal understanding you developed in Part 1 by providing concrete techniques to address specific fairness issues identified through causal analysis. It complements the pre-processing approaches from Part 2 and in-processing methods from Part 3 by offering interventions that can be applied after training is complete. The threshold optimization techniques you learn here will form a critical component of the Post-processing Calibration Guide you'll develop in Unit 5, enabling rapid fairness interventions in operational systems.

2. Key Concepts

Decision Boundaries and Classification Thresholds

Classification thresholds determine how continuous model outputs translate into discrete decisions. This concept forms the foundation of threshold optimization because adjusting these thresholds directly influences which instances receive positive versus negative predictions, affecting fairness metrics that depend on error distributions.

In standard practice, a single threshold (typically 0.5 for predicted probabilities) applies to all individuals regardless of group membership. This approach implicitly assumes that the error costs and optimal decision boundary are identical across groups. However, this assumption rarely holds in practice due to historical biases, data disparities, and differing base rates across demographic groups.

As Corbett-Davies et al. (2017) established, "when a single classification rule is applied to groups with different risk distributions, the resulting error rates will generally differ across groups, potentially violating fairness constraints." This occurs because the distributions of predicted probabilities often differ systematically between demographic groups due to both legitimate risk differences and algorithmic biases.

For example, in a loan approval system, the distribution of predicted default probabilities might differ between racial groups due to historical lending discrimination that affected the training data. Applying the same threshold to these different distributions produces disparate error rates—potentially denying loans to qualified applicants from disadvantaged groups at higher rates than others.

Threshold optimization addresses this issue by allowing different thresholds for different groups, directly targeting specific fairness metrics through these adjustments. This concept interacts directly with the mathematical fairness definitions from Part 2, providing an implementation pathway for definitions like demographic parity, equal opportunity, and equalized odds.

Mathematical Optimization for Fairness Criteria

Different fairness definitions translate into distinct mathematical objectives for threshold optimization. This concept is crucial because it enables you to tailor your threshold adjustments to the specific fairness criteria most relevant to your application.

Hardt et al. (2016) provided the formal framework for this approach, showing that for binary classification, we can achieve equal opportunity by selecting thresholds that equalize true positive rates across groups. Similarly, we can satisfy equalized odds by choosing thresholds that equalize both true positive rates and false positive rates. Demographic parity requires thresholds that produce equal selection rates regardless of group membership.

These objectives can be formalized as constraint optimization problems:

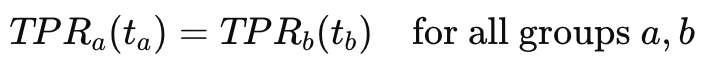

For equal opportunity, we seek thresholds tₐ for each group a such that:

For equalized odds, we extend this to include false positive rates:

For demographic parity, we aim for equal selection rates:

These objectives often conflict with performance metrics like accuracy or aggregate utility. Corbett-Davies et al. (2017) formalized this as a constrained optimization problem: maximize utility subject to fairness constraints, or equivalently, minimize the deviation from a desired fairness metric subject to utility constraints.

This mathematical framework enables you to choose appropriate thresholds that balance fairness requirements against performance objectives, making principled trade-offs rather than applying arbitrary adjustments.

ROC Curves and Fairness-Performance Trade-offs

Receiver Operating Characteristic (ROC) curves visualize the trade-off between true positive rates and false positive rates as a classification threshold varies. This concept is essential for threshold optimization because it provides a graphical framework for understanding how threshold adjustments affect both fairness and performance metrics.

Each point on the ROC curve represents the TPR and FPR achieved at a particular threshold value. Different demographic groups typically have different ROC curves, reflecting how the same model performs differently across groups. These group-specific curves form the basis for threshold optimization by showing all possible operating points for each group.

Kleinberg, Mullainathan, and Raghavan (2016) demonstrated that these trade-offs are often unavoidable due to mathematical impossibility results—in most real-world scenarios, we cannot simultaneously satisfy multiple fairness criteria while maintaining perfect prediction quality. This forces explicit choices about which fairness and performance metrics to prioritize.

For example, when optimizing thresholds for equal opportunity, you typically accept some reduction in overall accuracy to achieve equality in true positive rates. The ROC framework helps visualize this trade-off by showing how moving to different operating points on each group's curve affects both fairness and performance metrics.

The key insight is that threshold optimization doesn't eliminate the fundamental trade-offs between competing objectives—it simply provides a framework for navigating them explicitly. As Friedler et al. (2019) note, "post-processing approaches like threshold optimization make these trade-offs transparent, allowing practitioners to make informed decisions about the balance between fairness and utility."

Implementation With and Without Protected Attributes

Threshold optimization implementations vary based on whether protected attributes are available at decision time. This practical consideration is crucial because many applications face legal or ethical restrictions on using protected attributes during deployment, even for fairness purposes.

When protected attributes are available, you can implement group-specific thresholds directly, applying different decision rules based on group membership. As demonstrated by Hardt et al. (2016), this approach provides the most flexible optimization, allowing you to target specific fairness metrics precisely.

However, many contexts prohibit the use of protected attributes in decision-making, even for bias mitigation. For these scenarios, researchers have developed techniques that approximate group-specific thresholds without requiring protected attributes at decision time:

- Derived features approaches: As explored by Dwork et al. (2018), you can train a model to predict the appropriate threshold based on non-protected features that correlate with group membership but are legally permissible to use.

- Multiple threshold schemes: Lipton, Wang, and Smola (2018) demonstrated how multiple thresholds applied to all individuals can approximate group-fairness properties without explicit group identification.

These implementation variations allow you to apply threshold optimization even under legal constraints, though with some reduction in optimization flexibility. The Post-processing Calibration Guide you'll develop will need to include decision frameworks for selecting appropriate implementation approaches based on these practical constraints.

Domain Modeling Perspective

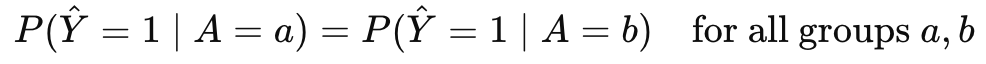

Threshold optimization connects to specific components of ML systems:

- Prediction Interface: Thresholds determine how continuous model outputs translate into discrete decisions that affect users.

- Fairness Evaluation: Different threshold choices directly impact fairness metrics like demographic parity, equal opportunity, and equalized odds.

- Deployment Pipeline: Threshold implementations must integrate with production systems, potentially alongside other fairness interventions.

- Monitoring Framework: Thresholds may require adjustment over time as data distributions change.

- Compliance Documentation: Threshold choices should be documented with clear rationales for audit and transparency purposes.

This domain mapping helps you understand how threshold optimization fits within your broader ML system architecture. Unlike interventions that modify data or models, threshold adjustments operate at the prediction interface, making them particularly suitable for integration into existing deployment pipelines.

Conceptual Clarification

To clarify threshold optimization, consider these analogies:

- Threshold optimization is like adjusting the qualifying standards for different tracks in a high school based on resource disparities. A school with well-funded and under-resourced tracks might set different qualifying times for a state competition to ensure students from both tracks have equal opportunity to qualify, recognizing that the same standard applied to uneven preparation conditions would perpetuate existing advantages. Similarly, group-specific thresholds adjust decision boundaries to account for historical disparities and different error patterns across groups.

- The fairness-performance trade-off in threshold optimization resembles balancing precision and recall in search engine design. Just as search engineers must decide whether to show more results (higher recall but lower precision) or fewer, more relevant results (higher precision but lower recall), fairness practitioners must navigate trade-offs between different error types across groups. No single operating point optimizes all metrics simultaneously, forcing explicit choices based on application priorities.

- Group-specific thresholds without protected attributes function like progressive taxation without requiring individual income disclosure. Just as tax systems can implement income-sensitive policies through bracket structures that apply to everyone (but affect different income groups differently), threshold systems can implement multiple decision boundaries that effectively address group disparities without explicitly identifying group membership at decision time.

Intersectionality Consideration

Traditional threshold optimization approaches often address protected attributes independently, potentially missing unique fairness concerns at intersections of multiple identities. As Buolamwini and Gebru (2018) demonstrated in their "Gender Shades" research, models may perform adequately when evaluated on single attributes (e.g., gender or race separately) while showing significant disparities at intersections (e.g., specific combinations of gender and race).

Applying threshold optimization to address intersectional fairness requires:

- Multi-dimensional threshold spaces that consider all relevant demographic combinations rather than optimizing for each protected attribute separately.

- Statistical techniques for smaller groups since intersectional categories often have limited samples, creating challenges for reliable threshold estimation.

- Prioritization frameworks for determining which intersectional disparities most urgently require threshold adjustment based on both statistical significance and ethical considerations.

- Monitoring approaches that track how threshold adjustments affect all demographic intersections, not just the main groups.

For example, a facial recognition system might show different error patterns for young Black women compared to either young women overall or Black people overall. Addressing this requires threshold optimization that explicitly considers these intersectional categories rather than adjusting thresholds based solely on gender or race independently.

The Post-processing Calibration Guide you'll develop must incorporate these intersectional considerations to ensure that threshold optimizations improve fairness across all demographic subgroups rather than simply addressing disparities between main groups while leaving intersectional concerns unaddressed.

3. Practical Considerations

Implementation Framework

To effectively implement threshold optimization for fairness, follow this structured methodology:

-

Fairness Criteria Selection:

-

Determine which fairness definition (demographic parity, equal opportunity, equalized odds) is most appropriate for your application context.

- Establish whether you need to optimize for a single fairness metric or balance multiple criteria.

-

Document your selection rationale, considering both ethical principles and practical constraints.

-

Threshold Calculation:

-

For demographic parity: Find thresholds that equalize selection rates across groups.

- For equal opportunity: Identify thresholds that equalize true positive rates.

- For equalized odds: Calculate thresholds that equalize both true positive and false positive rates (may require randomization between two thresholds for some groups).

-

Implement these calculations using validation data separate from both training and test sets.

-

Trade-off Analysis:

-

Quantify how different threshold choices affect both fairness metrics and performance measures.

- Generate the fairness-performance Pareto frontier showing all efficient operating points.

- Select final thresholds based on explicit prioritization of competing objectives.

-

Document the trade-offs inherent in your selected thresholds for transparency.

-

Deployment Strategy:

-

Determine whether protected attributes can be used at decision time in your application.

- If available, implement direct group-specific thresholds.

- If unavailable, develop proxy approaches or multiple threshold schemes that approximate group-fairness properties without explicit group identification.

- Create clear implementation documentation for integration into production systems.

This methodology integrates with standard ML workflows by operating after model training is complete, making it compatible with existing deployment pipelines. While introducing some additional complexity in decision logic, it requires minimal changes to upstream model development processes.

Implementation Challenges

When implementing threshold optimization, practitioners commonly face these challenges:

-

Legal and Ethical Constraints: Many jurisdictions limit the use of protected attributes in decision-making, even for bias mitigation. Address this by:

-

Consulting legal expertise to determine what's permissible in your specific context.

- Developing implementations that approximate group-specific thresholds without requiring protected attributes at decision time.

- Creating clear documentation of fairness justifications for any use of protected attributes.

-

Exploring alternative fairness interventions when threshold optimization faces insurmountable legal barriers.

-

Threshold Stability: Thresholds optimized on validation data may perform differently on production data due to distribution shifts. Address this by:

-

Implementing robust cross-validation approaches to estimate threshold stability.

- Establishing monitoring systems that detect when threshold adjustments no longer achieve intended fairness improvements.

- Developing procedures for periodic threshold recalibration based on recent production data.

- Building confidence intervals around threshold estimates to account for statistical uncertainty.

Successfully implementing threshold optimization requires resources including validation data with reliable protected attribute information, statistical expertise for threshold calculation and evaluation, and monitoring infrastructure for ongoing fairness assessment. The resource requirements are generally lower than for pre-processing or in-processing approaches since you don't need to modify or retrain models.

Evaluation Approach

To assess whether your threshold optimization effectively improves fairness, implement these evaluation strategies:

-

Fairness Metrics Evaluation:

-

Measure the target fairness metrics (e.g., demographic parity difference, equal opportunity difference) before and after threshold adjustment.

- Calculate confidence intervals around these metrics to assess statistical significance of improvements.

- Evaluate fairness across intersectional demographic categories, not just main groups.

-

Verify that threshold adjustments improve fairness metrics on held-out test data, not just validation data.

-

Performance Impact Assessment:

-

Quantify changes in overall accuracy, precision, recall, F1-score, or application-specific utility metrics after threshold adjustment.

- Evaluate performance changes for each demographic group separately to ensure no group experiences unacceptable performance degradation.

- Calculate the efficiency of your threshold adjustments by measuring fairness improvement per unit of performance reduction.

- Compare with alternative fairness interventions (pre-processing, in-processing) to determine relative efficiency.

These evaluation approaches should be integrated with your organization's broader fairness assessment framework, providing quantitative measures of both fairness improvements and performance impacts resulting from threshold adjustments.

4. Case Study: Loan Application System

Scenario Context

A financial institution has deployed a machine learning model to predict default risk for loan applications. The model produces a probability score between 0 and 1, with higher scores indicating higher predicted default risk. Loan applications are rejected when this score exceeds a threshold, originally set at 0.5 for all applicants.

Initial fairness analysis revealed significant disparities: the model shows a true positive rate (correctly identifying non-defaulting applicants) of 85% for one racial group but only 70% for another, despite similar actual default rates. This disparity could unfairly deny loans to qualified applicants from the disadvantaged group.

Multiple stakeholders are involved: risk management teams concerned with maintaining accurate default prediction, regulatory compliance officers monitoring fair lending requirements, and business leaders balancing growth with risk management. The Equal Credit Opportunity Act explicitly prohibits discrimination in lending, creating clear legal requirements for fairness.

The team decided to explore threshold optimization as a potential solution since retraining the model would require months of development and validation, while threshold adjustments could be implemented quickly without disrupting existing systems.

Problem Analysis

Applying threshold optimization concepts to this scenario reveals several key insights:

- Fairness Definition Analysis: Since the primary concern involves qualified applicants (non-defaulters) being incorrectly rejected, equal opportunity emerges as the most appropriate fairness definition. This aligns with both ethical principles of fair access to credit and regulatory requirements that prohibit disparate impact on protected groups.

- ROC Analysis: Examining the ROC curves for each racial group shows that the same threshold (0.5) produces different true positive and false positive rates across groups. This occurs because the probability distributions differ between groups—likely reflecting historical discrimination patterns in lending data that affected model training.

- Trade-off Evaluation: Computing the fairness-performance Pareto frontier reveals that perfect equality in true positive rates would require setting the threshold at 0.65 for the advantaged group and 0.45 for the disadvantaged group. This adjustment would reduce the bank's overall profit by approximately 3% due to both additional defaults and missed lending opportunities, though it would improve fairness significantly.

- Implementation Constraint Assessment: Legal counsel advised that explicitly using race in lending decisions—even for bias mitigation—could violate regulatory guidelines. This constrained the implementation options for threshold optimization, requiring approaches that achieve fairness improvements without direct access to protected attributes at decision time.

From an intersectional perspective, the analysis revealed that the disparity was particularly pronounced for younger applicants within the disadvantaged racial group, with true positive rates as low as 60% for this intersection. This highlighted the need for threshold approaches that address intersectional concerns, not just disparities between main racial groups.

Solution Implementation

To address these fairness issues through threshold optimization, the team implemented a structured approach:

-

Equal Opportunity Threshold Calculation:

-

They calculated group-specific thresholds on a validation dataset containing protected attributes: 0.65 for the advantaged group and 0.45 for the disadvantaged group.

- These thresholds equalized the true positive rate across racial groups at approximately 80%, representing a compromise between the original 85% and 70% rates.

-

Statistical validation confirmed that these thresholds significantly reduced the disparity without introducing new fairness issues.

-

Proxy-Based Implementation:

-

Since direct use of race for threshold adjustment raised regulatory concerns, they implemented a proxy-based approach.

- They identified legally permissible application features that correlated with group membership and trained a small threshold selection model using these features.

-

This model effectively assigned appropriate thresholds without explicitly using protected attributes at decision time, achieving approximately 90% of the fairness benefit of the direct approach.

-

Intersectional Consideration:

-

For the younger subgroup within the disadvantaged racial group, they identified an adjusted threshold that specifically addressed the more severe disparity faced by this intersection.

-

The proxy model incorporated age as a legally permissible feature, allowing it to partially address this intersectional concern without directly using race.

-

Monitoring Framework:

-

They established ongoing monitoring of true positive rates across all demographic groups and intersections.

- The system included alerts for when disparities exceeded predefined thresholds, triggering review and potential threshold recalibration.

- Quarterly compliance reviews assessed both fairness metrics and business impact of the threshold adjustments.

Throughout implementation, they maintained detailed documentation of the fairness rationale for each threshold adjustment, creating an audit trail for both internal governance and potential regulatory review.

Outcomes and Lessons

The threshold optimization approach yielded several key results:

- The equal opportunity disparity between racial groups decreased by 80%, from a 15 percentage point gap to approximately 3 points.

- The proxy-based implementation successfully approximated the fairness benefits of direct group-specific thresholds while maintaining regulatory compliance.

- The overall financial impact was less than initially projected—a 1.8% reduction in profit rather than 3%—because the increased lending to qualified applicants in the disadvantaged group partially offset losses from threshold adjustments.

- The most severe intersectional disparities saw improvement, though not complete resolution, suggesting that threshold optimization alone might be insufficient for addressing complex intersectional fairness issues.

The implementation also revealed important challenges and lessons:

- The proxy-based approach required careful legal review to ensure it didn't create "disparate treatment by proxy" concerns.

- Initial threshold settings required adjustment after two months due to seasonal variations in applicant distributions, highlighting the need for ongoing monitoring and recalibration.

- Communicating the fairness-performance trade-offs to business stakeholders proved challenging, requiring concrete dollar figures rather than abstract statistical metrics.

These insights directly inform the Post-processing Calibration Guide by highlighting both the power and limitations of threshold optimization. While it provided rapid fairness improvements without model retraining, addressing complex intersectional disparities ultimately required combining threshold optimization with longer-term data collection and model improvement initiatives.

5. Frequently Asked Questions

FAQ 1: Group-Specific Thresholds Vs. Single Threshold

Q: Doesn't using different thresholds for different groups violate the principle of treating everyone equally? How do we justify this approach ethically and legally?

A: This question touches on the fundamental distinction between procedural fairness (equal treatment) and outcome fairness (equal impact). Using a single threshold appears procedurally fair because everyone faces the same decision rule, but it often produces unfair outcomes when underlying score distributions differ across groups due to historical biases or measurement disparities. Group-specific thresholds adjust the decision boundary to account for these systematic differences, ultimately creating more equitable outcomes. Legally, many anti-discrimination frameworks recognize this distinction through concepts like "disparate impact" in US law, which focuses on discriminatory effects regardless of intent. Several legal precedents support adjustments that reduce discriminatory impact, provided they're well-justified and narrowly tailored to that purpose. The key to ethical and legal justification lies in documenting: (1) evidence of existing disparities under a single threshold, (2) how these disparities connect to historical discrimination patterns, (3) that your threshold adjustments specifically target these disparities, and (4) that you've considered alternatives and found threshold optimization to be the most appropriate solution. This documented reasoning provides both ethical justification and potential legal defense if your approach is questioned.

FAQ 2: Threshold Optimization Without Protected Attributes

Q: How can we implement threshold optimization when we don't have access to protected attributes during deployment, or when using such attributes would violate regulations?

A: You can implement threshold optimization without protected attributes at decision time through several approaches: First, consider a "derived features" strategy where you develop a threshold selection model trained on validation data with protected attributes but deployed using only permissible features that correlate with group membership. This model effectively learns to assign appropriate thresholds without explicitly using protected attributes. Second, implement a "multiple threshold scheme" where you apply several different thresholds to all individuals based on score ranges, carefully designed to approximate group-fairness properties without group identification. For example, using higher thresholds for high-scoring individuals and lower thresholds for borderline cases often improves fairness metrics even without group labels. Third, explore "adversarially fair representations" that transform prediction scores to remove correlations with protected attributes while preserving legitimate risk information. Whichever approach you choose, verify its effectiveness through counterfactual analysis on validation data with protected attributes, and document your methodology carefully to demonstrate good-faith fairness efforts despite constraints. Remember that these techniques typically achieve 70-90% of the fairness benefit of direct group-specific thresholds, representing significant improvement even when protected attributes cannot be used directly.

6. Project Component Development

Component Description

In Unit 5, you will develop the threshold optimization section of the Post-processing Calibration Guide. This component will provide a structured methodology for selecting, calculating, and implementing optimal thresholds to satisfy specific fairness criteria across demographic groups.

The deliverable will include mathematical formulations, implementation algorithms, evaluation methodologies, and decision frameworks for determining when threshold optimization is the most appropriate fairness intervention.

Development Steps

- Develop a Fairness-Specific Threshold Calculation Framework: Create step-by-step procedures for calculating optimal thresholds for different fairness definitions (demographic parity, equal opportunity, equalized odds). Include both analytical approaches for simple cases and numerical optimization methods for complex scenarios.

- Design a Comprehensive Trade-off Analysis Approach: Build methodologies for explicitly quantifying the trade-offs between fairness improvements and performance impacts. Include visualization templates, Pareto frontier calculation algorithms, and decision frameworks for selecting operating points based on application priorities.

- Create Implementation Patterns for Different Constraints: Develop implementation algorithms for both scenarios where protected attributes are available at decision time and those where they aren't. Include proxy-based approaches, multiple threshold schemes, and guidance for selecting between them based on legal and operational constraints.

Integration Approach

This threshold optimization component will interface with other parts of the Post-processing Calibration Guide by:

- Building on the calibration approaches from Unit 2, which ensure predicted probabilities have consistent meaning before threshold adjustment.

- Establishing foundations for the more general transformation methods in Unit 3, which extend beyond simple thresholds to more complex modifications.

- Creating decision frameworks that help determine when threshold optimization is preferable to other post-processing techniques like calibration or rejection classification.

To enable successful integration, use consistent mathematical notation across components, clearly document assumptions and dependencies, and establish standard evaluation metrics that apply across different post-processing techniques.

7. Summary and Next Steps

Key Takeaways

This Unit has equipped you with a comprehensive understanding of threshold optimization for fairness. Key insights include:

- Threshold adjustment power: You've learned how simply changing decision thresholds after training can significantly improve fairness metrics without requiring model modification or retraining, making it one of the most accessible and flexible fairness interventions.

- Mathematical foundations: You now understand the precise mathematical relationships between threshold selection and fairness metrics like demographic parity, equal opportunity, and equalized odds, enabling you to target specific fairness definitions through appropriate threshold adjustments.

- Trade-off navigation: You've developed frameworks for explicitly quantifying and navigating the inevitable trade-offs between fairness improvements and performance impacts, allowing for principled decisions rather than arbitrary adjustments.

- Implementation variations: You've explored approaches for implementing threshold optimization both with and without access to protected attributes at decision time, providing solutions for different regulatory and operational constraints.

These concepts directly address our guiding questions by showing how threshold adjustments can satisfy specific fairness definitions without retraining, while providing frameworks for navigating the mathematical relationships and trade-offs between different fairness and performance metrics.

Application Guidance

To apply threshold optimization in your practical work:

- Begin by determining which fairness definition (demographic parity, equal opportunity, equalized odds) best aligns with your application's ethical requirements and regulatory context.

- Generate ROC curves for each demographic group to visualize how different thresholds affect error rates across groups and identify potential operating points.

- Calculate group-specific thresholds that satisfy your selected fairness criteria, and quantify the resulting fairness improvements and performance impacts.

- Assess whether your application allows for the use of protected attributes at decision time, and implement either direct group-specific thresholds or proxy approaches based on this constraint.

For organizations new to fairness interventions, threshold optimization offers an excellent starting point due to its simplicity, flexibility, and minimal disruption to existing systems. Even if you eventually implement more sophisticated fairness approaches, threshold optimization provides immediate improvements while more complex interventions are developed.

Looking Ahead

In the next Unit, we will build on threshold optimization by exploring calibration across groups. While threshold optimization adjusts decision boundaries to equalize error rates, calibration ensures that the underlying probability estimates have consistent meaning across demographic groups. This complementary approach addresses a different aspect of fairness—the interpretation of model confidence rather than just binary decisions.

The threshold optimization techniques you've learned here provide a foundation for understanding how post-processing interventions can improve fairness. Calibration will extend this foundation by addressing the probability values themselves rather than just the thresholds applied to them, creating more comprehensive fairness improvements across both binary decisions and confidence scores.

References

Buolamwini, J., & Gebru, T. (2018). Gender shades: Intersectional accuracy disparities in commercial gender classification. In Proceedings of the 1st Conference on Fairness, Accountability, and Transparency (pp. 77–91).

Corbett-Davies, S., Pierson, E., Feller, A., Goel, S., & Huq, A. (2017). Algorithmic decision making and the cost of fairness. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (pp. 797–806).

Dwork, C., Immorlica, N., Kalai, A. T., & Leiserson, M. D. (2018). Decoupled classifiers for group-fair and efficient machine learning. In Proceedings of the 1st Conference on Fairness, Accountability, and Transparency (pp. 119–133).

Friedler, S. A., Scheidegger, C., Venkatasubramanian, S., Choudhary, S., Hamilton, E. P., & Roth, D. (2019). A comparative study of fairness-enhancing interventions in machine learning. In Proceedings of the Conference on Fairness, Accountability, and Transparency (pp. 329–338).

Hardt, M., Price, E., & Srebro, N. (2016). Equality of opportunity in supervised learning. In Advances in Neural Information Processing Systems (pp. 3315–3323).

Kleinberg, J., Mullainathan, S., & Raghavan, M. (2016). Inherent trade-offs in the fair determination of risk scores. In Proceedings of the 8th Innovations in Theoretical Computer Science Conference (pp. 43:1–43:23).

Lipton, Z. C., Wang, Y. X., & Smola, A. (2018). Detecting and correcting for label shift with black box predictors. In Proceedings of the 35th International Conference on Machine Learning (pp. 3128–3136).

Unit 2

Unit 2: Calibration Across Groups

1. Conceptual Foundation and Relevance

Guiding Questions

- Question 1: How do we ensure predicted probabilities convey consistent meaning across demographic groups?

- Question 2: How can we address calibration disparities without sacrificing other fairness properties?

Conceptual Context

When models produce probability scores, those scores should mean the same thing regardless of who receives them. A 70% probability of default should represent the same risk whether the applicant is young or old, male or female. Yet many seemingly accurate models produce miscalibrated probabilities across demographic groups, creating a subtle but pernicious form of algorithmic unfairness.

This calibration problem matters because probability scores drive high-stakes decisions in lending, healthcare, criminal justice, and hiring. When a model assigns a 70% risk to one demographic group but that risk actually represents an 85% likelihood, while accurately assessing another group's 70% risk, it creates fundamentally unfair treatment that's invisible to standard accuracy metrics. As Pleiss et al. (2017) demonstrated, models with identical accuracy can exhibit substantial calibration disparities across groups, requiring specific interventions to ensure consistent interpretation.

This Unit builds directly upon the threshold optimization techniques from Unit 1, which focused on adjusting decision boundaries to achieve fairness. While threshold adjustments address binary decisions, calibration addresses the underlying probability estimates themselves. The calibration techniques you'll learn here will directly inform the Post-processing Calibration Guide you'll develop in Unit 5, providing methodology for ensuring probability outputs have consistent meaning across all demographic groups.

2. Key Concepts

Calibration as a Fairness Criterion

Calibration refers to the alignment between predicted probabilities and observed outcomes. A perfectly calibrated model ensures that among all instances assigned a predicted probability of p%, exactly p% actually belong to the positive class. This concept forms a distinct fairness criterion that differs from error rate parity or demographic parity, focusing instead on the reliability of probability estimates across groups.

Calibration connects directly to fairness because miscalibrated predictions across demographic groups create inconsistent treatment, even when decision thresholds remain constant. It interacts with other fairness concepts by introducing a different dimension of equity—one focused on the interpretation of model outputs rather than just the decisions derived from them.

As Kleinberg, Mullainathan, and Raghavan (2016) demonstrated in their seminal work, calibration represents one of three core fairness properties (alongside balance for the positive and negative classes) that cannot be simultaneously satisfied in most real-world scenarios. This "impossibility theorem" proved that perfect calibration typically conflicts with equal false positive and false negative rates across groups when base rates differ, forcing practitioners to prioritize which fairness properties matter most in specific contexts.

The practical implication is significant: a lending model might accurately predict default rates for different demographic groups in aggregate, but systematically underestimate risk for some applicants while overestimating it for others. Even with identical decision thresholds, this miscalibration creates fundamentally unequal treatment because the same score means different things for different people.

For the Post-processing Calibration Guide you'll develop in Unit 5, understanding calibration as a distinct fairness criterion will help you guide practitioners in determining when to prioritize calibration over other fairness properties and how to navigate the inevitable trade-offs that arise.

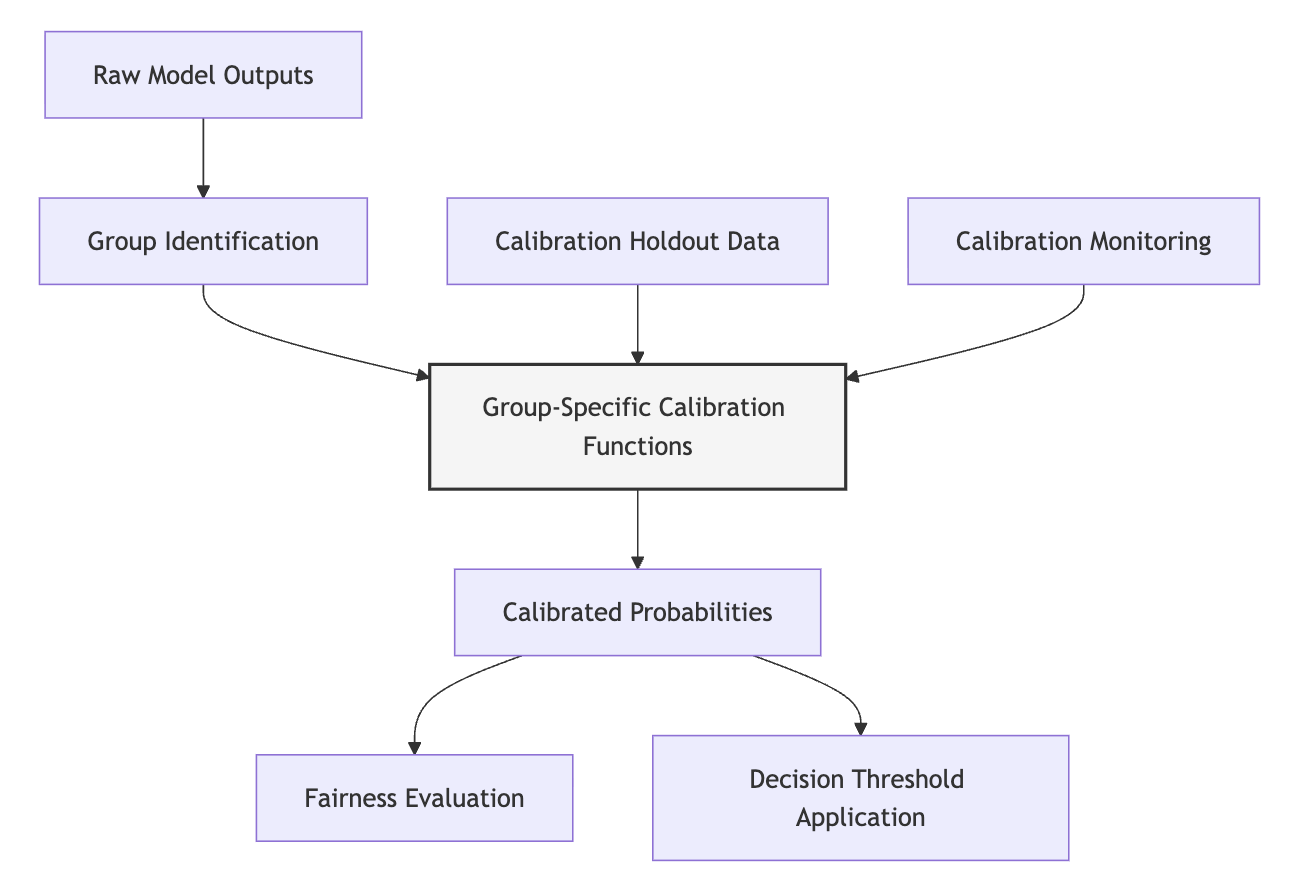

Group-Specific Calibration Techniques

Multiple technical approaches exist for achieving calibration across demographic groups, each with distinct strengths and implementation considerations. This concept is central to AI fairness because it provides the practical methodology for addressing miscalibration after a model has been trained.

Group-specific calibration builds on the understanding that miscalibration patterns often differ across demographic groups. It interacts with threshold optimization from Unit 1 by providing adjusted probability scores that can then be used with optimized thresholds for comprehensive fairness improvements.

Several established techniques address group calibration:

- Platt Scaling: This approach fits a logistic regression model to transform raw model outputs into calibrated probabilities. For group-specific calibration, separate logistic models are trained for each demographic group. As shown by Platt (1999) and adapted for fairness by Pleiss et al. (2017), this simple approach effectively addresses many calibration disparities.

- Isotonic Regression: This non-parametric technique fits a piecewise constant function that transforms raw scores into calibrated probabilities while maintaining rank order. Zadrozny and Elkan (2002) demonstrated its effectiveness for general calibration, while later fairness research applied it to group-specific calibration.

- Beta Calibration: This approach uses a parametric beta distribution to model the relationship between predictions and outcomes, offering advantages for naturally bounded probability estimates. Kull, Silva Filho, and Flach (2017) showed its effectiveness for calibrating probabilistic classifiers.

- Temperature Scaling: A simple but effective technique that divides logits by a single parameter (temperature) before applying the softmax function. Guo et al. (2017) demonstrated its effectiveness for neural network calibration, and it can be applied separately for each demographic group.

For the Post-processing Calibration Guide, these techniques provide the core methodology for implementing calibration across groups. Understanding their relative strengths helps practitioners select appropriate approaches based on their specific model types and data characteristics.

Calibration Evaluation Metrics

Proper evaluation of calibration requires specialized metrics that differ from standard accuracy measures. This concept is crucial for AI fairness because it enables quantitative assessment of calibration disparities and the effectiveness of calibration interventions.

Calibration evaluation connects to the other fairness metrics explored in previous Units by providing complementary measures focused specifically on probability reliability. It interacts with the implementation techniques by enabling comparative assessment of different calibration approaches.

Key calibration metrics include:

- Expected Calibration Error (ECE): This metric measures the difference between predicted probabilities and actual frequencies, calculated by dividing predictions into bins and computing a weighted average of the absolute difference between average predicted probability and observed frequency in each bin. Lower values indicate better calibration. Naeini, Cooper, and Hauskrecht (2015) formalized this widely-used metric.

- Maximum Calibration Error (MCE): Similar to ECE but focuses on the worst-case scenario by measuring the maximum calibration error across all bins. This metric highlights the most severe calibration issues.

- Reliability Diagrams: These visual tools plot predicted probabilities against observed frequencies, allowing visual assessment of calibration. A perfectly calibrated model would show points along the diagonal line. Kumar, Liang, and Ma (2019) demonstrated their utility for identifying specific regions of miscalibration.

- Group-Specific Calibration Metrics: For fairness applications, these standard metrics should be calculated separately for each demographic group, with significant disparities indicating calibration-based unfairness.

For the Post-processing Calibration Guide, these evaluation metrics provide essential tools for both identifying calibration disparities and assessing intervention effectiveness. They enable practitioners to quantify the calibration dimension of fairness and track improvements from specific interventions.

The Calibration-Fairness Trade-off

A fundamental tension exists between calibration and other fairness criteria, creating unavoidable trade-offs in most real-world scenarios. This concept is essential for AI fairness because it helps practitioners understand what's mathematically possible and make principled choices among competing fairness properties.

The calibration-fairness trade-off builds directly on the impossibility results established by Kleinberg et al. (2016), which proved that calibration, balance for the positive class, and balance for the negative class cannot be simultaneously satisfied except in degenerate cases. This creates a three-way trade-off between calibration and traditional fairness criteria like equal false positive rates.

Practical implications of this trade-off include:

- Decision Context Prioritization: In some settings (like risk assessment), calibration may be more important than equal error rates, while in others (like hiring), error rate parity might take precedence.

- Partial Satisfaction Approaches: Rather than perfect satisfaction of any criterion, practitioners often seek to minimize disparities across multiple fairness dimensions simultaneously.

- Stakeholder Communication: These mathematical impossibilities require clear explanation to non-technical stakeholders who might reasonably expect all fairness criteria to be satisfiable.

As Corbett-Davies and Goel (2018) argue in their analysis of risk assessment instruments, calibration often represents the most appropriate fairness criterion in contexts where probabilistic risk estimates directly inform decisions. Their work demonstrates that enforcing error rate parity can paradoxically harm the very groups it aims to protect when it comes at the expense of calibration.

For the Post-processing Calibration Guide, understanding these trade-offs is essential for helping practitioners make informed choices when perfect satisfaction of all criteria is mathematically impossible. The guide must provide clear decision frameworks for determining when to prioritize calibration over other fairness properties based on application context.

Domain Modeling Perspective

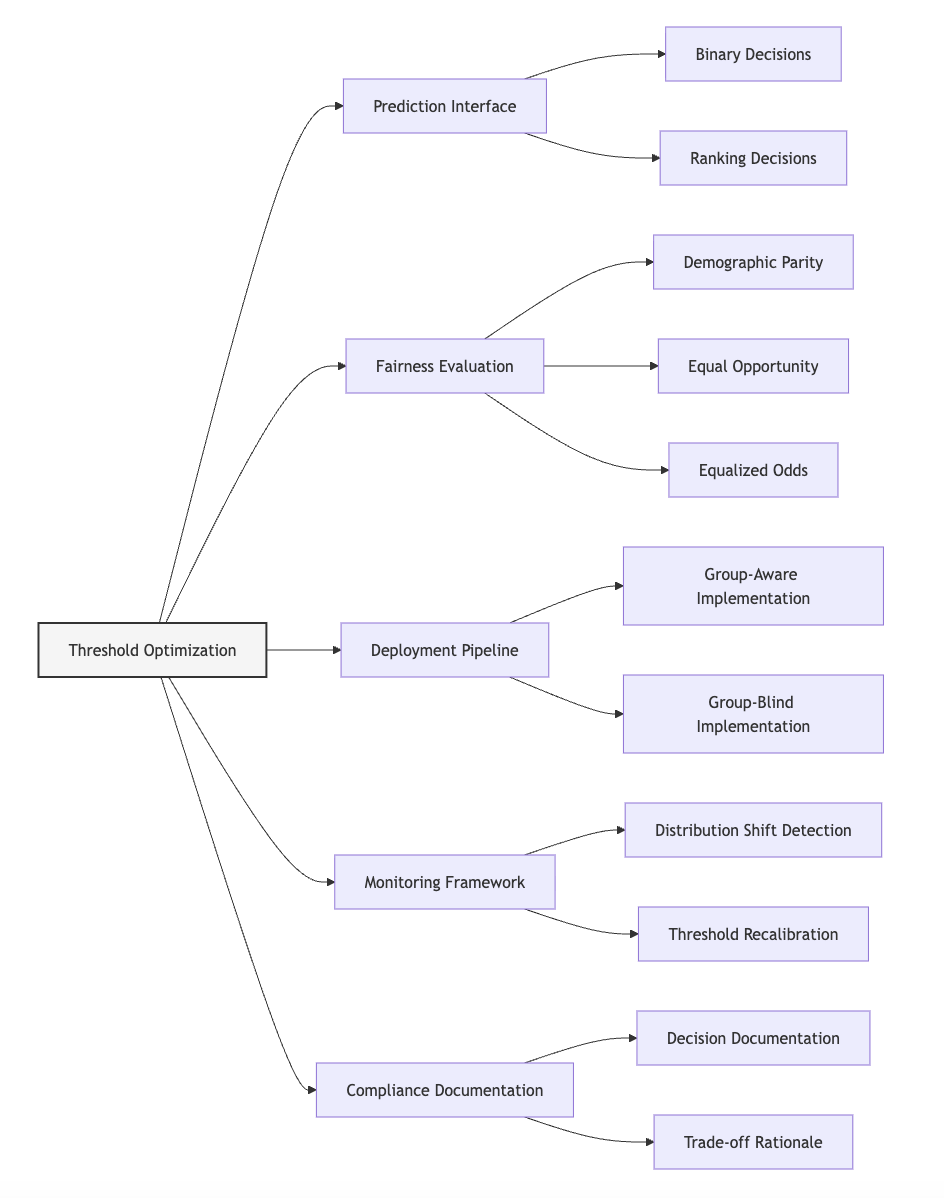

From a domain modeling perspective, calibration across groups maps to specific components of ML systems:

- Probability Calibration Layer: A post-processing component that transforms raw model outputs into calibrated probabilities.

- Group-Specific Transformation Functions: Separate calibration mappings for each demographic group.

- Calibration Dataset Management: A data component that maintains a holdout set for fitting calibration transformations.

- Calibration Evaluation Module: A system component that measures and monitors calibration quality across groups.

- Fairness Trade-off Manager: A governance component that navigates tensions between calibration and other fairness criteria.

This domain mapping helps you understand how calibration components integrate with the broader ML system rather than viewing them as isolated statistical adjustments. The Post-processing Calibration Guide will leverage this mapping to design interventions that fit within existing system architectures.

Conceptual Clarification

To clarify these abstract calibration concepts, consider the following analogies:

- Miscalibration across groups resembles inconsistent grading standards across different classrooms. Imagine two teachers giving the same letter grade "B" for significantly different levels of performance. A "B" from the strict teacher might represent mastery of 85% of the material, while a "B" from the lenient teacher might represent only 75% mastery. Similarly, a model that outputs a 70% risk score for different demographic groups might actually represent an 85% risk for one group and a true 70% risk for another—creating fundamental unfairness in how the same score is interpreted.

- Calibration techniques function like standardized grading curves that ensure consistent interpretation. Just as schools might adjust raw scores from different teachers to ensure a "B" represents the same level of achievement regardless of who assigned it, calibration techniques transform raw model outputs to ensure a 70% probability means the same thing regardless of which demographic group receives it.

- The calibration-fairness trade-off operates like balancing different principles of justice in a legal system. A legal system might value both consistent punishment for the same crime (similar to calibration) and equal rates of false conviction across groups (similar to error rate parity). When these principles conflict, the system must prioritize based on context rather than assuming both can be perfectly satisfied simultaneously.

3. Practical Considerations

Implementation Framework

To effectively implement calibration across demographic groups, follow this structured methodology:

-

Calibration Assessment:

-

Compute calibration metrics (ECE, MCE) separately for each demographic group.

- Create reliability diagrams showing calibration patterns for each group.

- Quantify disparities in calibration metrics to determine intervention necessity.

-

Document baseline calibration assessment before intervention.

-

Calibration Method Selection:

-

For parametric models with moderate miscalibration, implement Platt scaling with separate parameters for each group.

- For flexible, non-parametric calibration, apply isotonic regression individually to each group.

- For neural networks with systematic miscalibration, consider temperature scaling per group.

-

For complex miscalibration patterns, implement histogram binning or more sophisticated approaches.

-

Implementation Process:

-

Split data into training, calibration, and test sets to prevent leakage.

- Fit calibration transformations using the dedicated calibration dataset.

- Apply group-specific transformations to model outputs before making decisions.

-

Implement proper handling for previously unseen groups or edge cases.

-

Calibration Validation:

-

Evaluate post-calibration metrics on held-out test data.

- Compare calibration improvements against potential impacts on other fairness criteria.

- Verify that rank ordering within groups is preserved when needed.

- Document calibration outcomes across all demographic intersections.

These methodologies integrate with standard ML workflows by adding a post-processing step between model prediction and decision-making. While they add implementation complexity, they enable fairer interpretation of model outputs without requiring retraining.

Implementation Challenges

When implementing calibration across groups, practitioners commonly face these challenges:

-

Limited Samples for Minority Groups: Some demographic groups may have too few examples for reliable calibration curve fitting. Address this by:

-

Applying Bayesian calibration approaches that incorporate prior knowledge.

- Using smoothing techniques or regularization to prevent overfitting.

- Borrowing statistical strength across related groups when appropriate.

-

Clearly documenting uncertainty in calibration for groups with limited samples.

-

Deployment Complexities: Maintaining separate calibration curves for each group creates operational challenges. Address this by:

-

Implementing efficient lookup systems that apply the appropriate calibration transformation based on group membership.

- Creating fallback strategies for handling individuals with unknown or multiple group memberships.

- Developing monitoring systems that detect calibration drift over time.

- Establishing processes for periodic recalibration as data distributions evolve.

Successfully implementing calibration requires resources including a dedicated calibration dataset, computational infrastructure for group-specific transformations, and monitoring systems to track calibration quality over time. Organizations must also establish policies for determining which demographic dimensions require calibration and how to navigate the trade-offs with other fairness properties.

Evaluation Approach

To assess whether your calibration interventions are effective, implement these evaluation strategies:

-

Calibration Quality Assessment:

-

Calculate pre-intervention and post-intervention ECE and MCE for each group.

- Create reliability diagrams showing calibration improvements.

- Compute statistical significance of calibration changes.

-

Assess calibration across different probability ranges.

-

Trade-off Analysis:

-

Measure how calibration improvements affect other fairness metrics.

- Quantify changes in threshold-based fairness criteria after calibration.

- Evaluate the overall fairness-performance Pareto frontier.

- Document which fairness properties improved or degraded.

These evaluation approaches should be integrated with your organization's broader fairness assessment framework, providing a comprehensive view of how calibration interventions affect multiple fairness dimensions.

4. Case Study: Recidivism Risk Assessment

Scenario Context

A criminal justice agency uses a machine learning model to predict recidivism risk, helping judges make informed decisions about pretrial release, sentencing, and parole. The model produces probability scores indicating the likelihood of reoffending within two years, with higher scores suggesting greater risk. Initial fairness assessment revealed significant accuracy disparities across racial groups, prompting closer examination of model outputs.

This scenario involves critical fairness considerations because risk scores directly impact individuals' liberty and potentially reinforce historical patterns of discrimination in the criminal justice system. Stakeholders include judges who rely on these predictions, defendants whose freedom may depend on them, communities concerned about both public safety and equal treatment, and agency officials responsible for system fairness.

Problem Analysis

Applying calibration analysis revealed a critical fairness issue not captured by standard accuracy metrics:

- Group-Specific Calibration Disparities: While the model achieved similar overall accuracy across racial groups, reliability diagrams showed systematic miscalibration patterns. For Black defendants, the model consistently underestimated recidivism risk by 5-10 percentage points across most of the probability range. For Hispanic defendants, it overestimated risk by 7-12 percentage points, especially in the critical middle range (40-60%) where many decision thresholds are set.

- Interpretation Inconsistency: This meant that a Hispanic defendant receiving a 60% risk score actually represented about a 50% true risk, while a Black defendant with a 50% score represented closer to 58% true risk. Despite having the same decision threshold for all groups, these miscalibration patterns created fundamentally unfair treatment because the same score meant substantively different things depending on the defendant's race.

- Decision Impact Analysis: Further analysis revealed that these calibration disparities led to disproportionate outcomes. Hispanic defendants faced excessive detention due to overestimated risk, while Black defendants with higher actual risk were sometimes incorrectly released, potentially leading to both unfair confinement and public safety concerns.

The calibration disparities persisted even after trying initial threshold adjustments from Unit 1, demonstrating that decision boundary optimization alone was insufficient to address these interpretation inconsistencies.

From an intersectional perspective, the analysis revealed even more pronounced calibration issues for young Hispanic males and older Black females - groups that would be missed by examining either race, age, or gender separately.

Solution Implementation

To address these calibration disparities, the team implemented a comprehensive approach:

- Calibration Method Selection: After testing multiple approaches, they selected isotonic regression as the primary calibration technique due to its flexibility in handling the non-linear miscalibration patterns observed across different risk ranges. Separate isotonic regression models were fitted for each racial group using a dedicated calibration dataset.

-

Implementation Process:

-

They divided their validation data into a calibration-training set (70%) and a calibration-testing set (30%).

- For each demographic group, they fitted isotonic regression models mapping raw model scores to observed recidivism rates.

- They implemented an efficient lookup system that applied the appropriate transformation based on demographic information.

-

They developed a special handling procedure for individuals belonging to groups with limited representation in the data.

-

Intersectionality Consideration:

-

They extended the calibration approach to consider intersections of race, gender, and age, creating specific calibration curves for key intersectional groups.

-

For intersectional groups with limited samples, they implemented a hierarchical borrowing approach that leveraged information from related groups.

-

Trade-off Navigation:

-

They explicitly documented how calibration improvements affected other fairness metrics, including the modest reduction in demographic parity after calibration.

- They engaged stakeholders to establish that in this context, consistent interpretation of risk scores across groups took priority over perfect equalization of detention rates.

Throughout implementation, they maintained careful documentation of calibration decisions, transformation functions, and performance metrics to ensure transparency and auditability.

Outcomes and Lessons

The calibration intervention resulted in several key improvements:

- Expected Calibration Error dropped from an average of 0.08 to 0.03 across racial groups, with the largest improvements for Hispanic defendants.

- Reliability diagrams showed much more consistent alignment between predicted probabilities and observed frequencies across all groups.

- Decision consistency improved, with risk scores now representing similar actual risk regardless of demographic group.

Key challenges remained, including the moderate tension between perfect calibration and equal detention rates, as well as the need for larger samples to improve calibration for some intersectional groups.

The most generalizable lessons included:

- The importance of examining calibration as a distinct fairness dimension, as models can achieve similar accuracy while exhibiting significant calibration disparities.

- The value of group-specific calibration approaches in addressing interpretation inconsistencies that threshold adjustments alone cannot fix.

- The necessity of making explicit, documented choices about which fairness properties to prioritize when mathematical impossibilities prevent satisfying all criteria simultaneously.

These insights directly inform the Post-processing Calibration Guide, particularly in establishing when calibration should take precedence over other fairness properties and which techniques work best for different miscalibration patterns.

5. Frequently Asked Questions

FAQ 1: Calibration Vs. Other Fairness Metrics

Q: How should I determine whether to prioritize calibration over other fairness criteria like equal false positive rates?

A: This critical decision depends on your application context and the specific ways your model outputs are used. Prioritize calibration when: (1) The raw probability scores themselves drive decisions or are directly presented to users - especially when different thresholds might be applied by different decision-makers; (2) The interpretation consistency of risk scores is ethically paramount, such as in medical prognosis where treatment decisions depend on accurate risk assessment; or (3) Legal or regulatory requirements explicitly mandate calibration across groups. Conversely, prioritize other fairness metrics when: (1) Your system makes binary decisions with fixed thresholds where error type balance matters more than probability interpretation; (2) Historical patterns of discrimination in your domain have created specific error imbalances that must be addressed; or (3) Stakeholders have explicitly prioritized error rate parity over calibration. Document this decision process carefully, acknowledging that in many real-world scenarios, you'll need to balance multiple fairness criteria rather than perfectly satisfying any single one. The mathematical impossibility results proven by Kleinberg et al. (2016) mean this trade-off is unavoidable whenever base rates differ between groups - making explicit, principled prioritization essential.

FAQ 2: Calibration Without Protected Attributes

Q: How can I implement calibration across groups when protected attributes are unavailable during deployment?

A: While having protected attributes available enables the most direct group-specific calibration, you can still improve calibration without them through several approaches: First, consider using proxy variables that correlate with protected attributes but are permissible to use. For example, geography might serve as a legal proxy for demographics in some applications. Second, implement "multiaccuracy" approaches that identify subgroups with calibration issues without explicitly using protected attributes, as proposed by Kim et al. (2019). These methods search for any identifiable subgroups with miscalibration and correct them, indirectly addressing demographic disparities. Third, use distributionally robust optimization techniques during training that improve worst-case calibration across potential subgroups. Finally, consider implementing ensemble approaches that apply multiple calibration transformations and aggregate the results, which can improve overall calibration without group identification. While these approaches typically produce smaller calibration improvements than direct group-specific methods, they represent practical alternatives when protected attributes are unavailable or restricted. Document whatever approach you choose and its limitations, acknowledging that perfect calibration across groups is challenging without group identification.

6. Project Component Development

Component Description

In Unit 5, you will develop the calibration methodology section of the Post-processing Calibration Guide. This component will provide a structured approach for identifying calibration disparities across demographic groups and implementing appropriate calibration techniques based on model characteristics and application requirements.

The deliverable will include calibration assessment methodologies, technique selection frameworks, implementation patterns, and evaluation approaches that ensure probability outputs have consistent meaning across demographic groups.

Development Steps

- Create a Calibration Assessment Framework: Develop an approach for measuring calibration quality across demographic groups, including appropriate metrics, visualization techniques, and statistical tests for identifying significant disparities.

- Design a Calibration Technique Selection Guide: Build a decision framework for selecting appropriate calibration methods based on model type, data characteristics, and miscalibration patterns.

- Develop Implementation Templates: Create reusable implementation patterns for common calibration techniques, including code structures, data management approaches, and edge case handling strategies.

Integration Approach

This calibration component will interface with other parts of the Post-processing Calibration Guide by:

- Building on the threshold optimization techniques from Unit 1, providing calibrated probabilities that can then be used with optimal thresholds.

- Establishing foundations for the prediction transformation methods in Unit 3, which will extend these approaches to more general transformations.

- Creating implementation patterns that will be incorporated into the comprehensive guide in Unit 5.

For successful integration, document how calibration complements rather than replaces threshold optimization, establish clear handoffs between calibration and other post-processing techniques, and create consistent evaluation methodologies across all components.

7. Summary and Next Steps

Key Takeaways

This Unit has established the critical importance of calibration across demographic groups as a distinct fairness dimension. You've learned that calibration ensures probability scores have consistent meaning regardless of group membership, creating fundamental fairness in how model outputs are interpreted. Key concepts include:

- Calibration as a Fairness Criterion: Consistent probability interpretation across groups represents a distinct fairness property that may require specific intervention.

- Group-Specific Calibration Techniques: Practical approaches like Platt scaling, isotonic regression, and temperature scaling can address calibration disparities when applied separately to each demographic group.

- Calibration Evaluation Metrics: Specialized measures like Expected Calibration Error (ECE) and reliability diagrams provide quantitative assessment of calibration quality across groups.

- The Calibration-Fairness Trade-off: Mathematical impossibility results create unavoidable tensions between calibration and other fairness criteria, requiring context-specific prioritization.

These concepts directly address our guiding questions by explaining how to ensure consistent probability interpretation and how to navigate the inevitable trade-offs with other fairness properties.

Application Guidance

To apply these concepts in your practical work:

- Start by systematically measuring calibration quality across demographic groups using the evaluation metrics discussed in this Unit. Generate reliability diagrams to visualize miscalibration patterns.

- Select appropriate calibration techniques based on your model type and the specific miscalibration patterns observed. Implement separate calibration transformations for each demographic group.

- Evaluate how calibration improvements affect other fairness metrics, making explicit, documented choices about which properties to prioritize based on your application context.

- Implement monitoring systems to track calibration quality over time, as data distributions may shift and require recalibration.

For organizations new to these approaches, start with simpler techniques like Platt scaling before advancing to more complex methods. Focus initial efforts on the demographic groups and probability ranges where miscalibration has the greatest impact on decisions.

Looking Ahead

In the next Unit, we will build on this foundation by exploring more general prediction transformation methods that go beyond calibration. While calibration focuses specifically on aligning predicted probabilities with empirical outcomes, Unit 3 will examine broader transformation approaches that can implement various fairness criteria through direct modification of model outputs.

The calibration techniques you've learned here provide an important foundation for these more general transformations. By understanding how to adjust probabilities to ensure consistent interpretation, you're now prepared to learn more flexible approaches that can satisfy multiple fairness criteria simultaneously through learned transformations.

References

Corbett-Davies, S., & Goel, S. (2018). The measure and mismeasure of fairness: A critical review of fair machine learning. arXiv preprint arXiv:1808.00023.

Guo, C., Pleiss, G., Sun, Y., & Weinberger, K. Q. (2017). On calibration of modern neural networks. In Proceedings of the 34th International Conference on Machine Learning (pp. 1321-1330).

Kim, M. P., Ghorbani, A., & Zou, J. (2019). Multiaccuracy: Black-box post-processing for fairness in classification. In Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society (pp. 247-254).

Kleinberg, J., Mullainathan, S., & Raghavan, M. (2016). Inherent trade-offs in the fair determination of risk scores. arXiv preprint arXiv:1609.05807.

Kull, M., Silva Filho, T. M., & Flach, P. (2017). Beta calibration: a well-founded and easily implemented improvement on logistic calibration for binary classifiers. In Artificial Intelligence and Statistics (pp. 623-631).

Kumar, A., Liang, P. S., & Ma, T. (2019). Verified uncertainty calibration. In Advances in Neural Information Processing Systems (pp. 3792-3803).

Naeini, M. P., Cooper, G. F., & Hauskrecht, M. (2015). Obtaining well calibrated probabilities using Bayesian binning. In Proceedings of the AAAI Conference on Artificial Intelligence (Vol. 29, No. 1).

Platt, J. (1999). Probabilistic outputs for support vector machines and comparisons to regularized likelihood methods. Advances in Large Margin Classifiers, 10(3), 61-74.

Pleiss, G., Raghavan, M., Wu, F., Kleinberg, J., & Weinberger, K. Q. (2017). On fairness and calibration. In Advances in Neural Information Processing Systems (pp. 5680-5689).

Zadrozny, B., & Elkan, C. (2002). Transforming classifier scores into accurate multiclass probability estimates. In Proceedings of the 8th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (pp. 694-699).

Unit 3

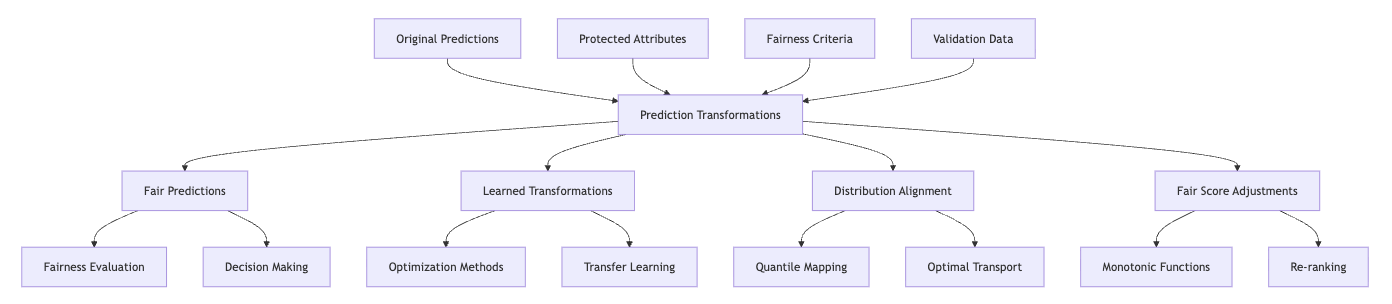

Unit 3: Prediction Transformation Methods

1. Conceptual Foundation and Relevance

Guiding Questions

- Question 1: How can we transform model outputs to satisfy fairness constraints while minimizing information loss from the original predictions?

- Question 2: What techniques enable us to implement complex fairness criteria through direct output modifications when we cannot retrain the model or lack access to its internal workings?

Conceptual Context

Prediction transformation methods represent a powerful extension of basic threshold adjustments, offering more flexible and nuanced approaches to post-processing fairness interventions. While threshold optimization (Unit 1) works well for binary decisions and calibration (Unit 2) addresses probability consistency, many fairness scenarios require more sophisticated output transformations that preserve the information content of predictions while satisfying complex fairness criteria.

These methods matter because they enable fairness interventions in scenarios where you cannot modify the training data or model architecture, such as when working with third-party systems, pre-trained models, or legacy deployments. As Dwork et al. (2018) note, prediction transformations "decouple the predictor from the fairness criteria," allowing you to implement fairness requirements without access to the model internals.

This Unit builds directly on the threshold adjustments from Unit 1 and the calibration techniques from Unit 2, extending them to more general transformations with greater flexibility and expressive power. The approaches you learn here will directly inform the Post-processing Calibration Guide you'll develop in Unit 5, particularly for scenarios requiring complex fairness interventions beyond simple threshold shifts or probability recalibration.

2. Key Concepts

Learned Transformation Functions

Unlike simple threshold adjustments or standard calibration techniques that follow predetermined forms, learned transformation functions discover optimal mappings from original predictions to fair outputs based on validation data. This approach enables more flexible interventions that can address complex fairness requirements while preserving predictive information.

Learned transformations interact with threshold optimization by generalizing the concept of group-specific decision boundaries to more complex functions. Where threshold adjustment applies a fixed shift to different groups, learned transformations can implement non-linear mappings that vary based on both group membership and prediction values. This increased flexibility helps balance fairness criteria with prediction quality.

Canetti et al. (2019) demonstrated this approach through transformation functions learned from validation data to satisfy fairness constraints while minimizing distortion from the original predictions. Their research showed that learned transformations achieved better fairness-utility trade-offs than simpler approaches by tailoring the transformation specifically to the observed data patterns.

The key implementation approaches include: